Can AI Be Trained to Spot Deepfakes Made by Other AI?

In the age of synthetic media, what’s real is no longer obvious. Deepfakes, once the stuff of sci-fi, are now accessible, hyper-realistic, and alarmingly easy to create thanks to generative AI. But as these fake videos and voices flood our feeds, a high-stakes question arises: Can we fight AI with AI?

The answer may define the future of trust online.

This blog explores the cutting edge of “AI-on-AI detection”, where machine learning systems are being trained not just to mimic humans, but to outsmart their synthetic counterparts. We’ll break down how these systems work, why most current detectors fall short, and what it’ll take to build algorithms that can actually keep up with an adversary that learns at the speed of compute.

Whether you’re a tech leader worried about misinformation, a developer building software products, or simply someone curious about the next phase of digital truth, we’ll walk you through the breakthroughs, the challenges, and the ethical tightrope of AI fighting AI.

Let’s unpack the arms race you didn’t know was already happening.

What Makes Deepfakes So Hard to Detect?

Deepfakes are no longer pixelated parodies; they’re polished, persuasive, and often hard to spot with the human eye. Thanks to powerful generative AI models like GANs (Generative Adversarial Networks) and diffusion models, machines can now fabricate hyper-realistic audio, video, and imagery that mimic real people with eerie precision. These models don’t just generate visuals; they simulate facial microexpressions, lip-sync audio to video frames, and even replicate voice tone and cadence.

The problem? Traditional detection methods, like manual analysis or static watermarking, are simply too slow, too shallow, or too brittle. As deepfakes get more sophisticated, these legacy techniques crumble. They often rely on spotting surface-level artifacts (like irregular blinking or unnatural lighting), but modern deepfake models are learning to erase those tells.

In short: we’re dealing with a shape-shifting adversary, one that learns, adapts, and evolves faster than rule-based systems can keep up. Detecting deepfakes now requires AI models that are just as smart and, ideally, one step ahead.

How AI-Based Deepfake Detection Works

If deepfakes are the virus, AI might just be the vaccine.

AI-based deepfake detection works by teaching models to recognize subtle, often invisible inconsistencies in fake media: things most humans would never notice. These include unnatural facial movements, inconsistencies in lighting and reflections, mismatched lip-syncing, pixel-level distortions, or audio cues that don’t align with mouth shape and timing.

To do this, detection models are trained on massive datasets of both real and fake media. Over time, they learn the “fingerprints” of synthetic content: patterns in the noise, anomalies in compression, or statistical quirks in how pixels behave.
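To make the idea of a learned “fingerprint” concrete, here is a minimal sketch in Python. It assumes, purely for illustration, that real pixel rows carry more high-frequency sensor noise than over-smoothed synthetic ones; real generators may leave very different traces, or none of this kind. The helper names (`high_freq_energy`, `fit_threshold`, `make_row`, `predict_fake`) and the synthetic data are hypothetical, not taken from any real detector.

```python
import random
from statistics import mean

def high_freq_energy(signal):
    """Mean squared second difference: a crude measure of high-frequency content."""
    diffs = [signal[i - 1] - 2 * signal[i] + signal[i + 1]
             for i in range(1, len(signal) - 1)]
    return mean(d * d for d in diffs)

def fit_threshold(real_feats, fake_feats):
    """'Training' here is just placing a boundary midway between the class means."""
    return (mean(real_feats) + mean(fake_feats)) / 2

def make_row(rng, noise):
    """Hypothetical pixel row: a smooth gradient plus Gaussian noise."""
    return [i / 64 + rng.gauss(0, noise) for i in range(64)]

rng = random.Random(0)
# Assumption for illustration only: "real" rows are noisier than "fake" rows.
real_feats = [high_freq_energy(make_row(rng, 0.05)) for _ in range(200)]
fake_feats = [high_freq_energy(make_row(rng, 0.01)) for _ in range(200)]
threshold = fit_threshold(real_feats, fake_feats)

def predict_fake(row):
    """Flag a row as synthetic when its noise energy falls below the learned boundary."""
    return high_freq_energy(row) < threshold
```

A production detector learns thousands of such features automatically instead of relying on one hand-picked statistic, but the principle is the same: measure something that differs between the two classes, then fit a decision boundary on labeled examples.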

Here are the key approaches powering this detection revolution:

- Convolutional Neural Networks (CNNs): These models excel at analyzing images frame-by-frame. They can pick up on minute pixel artifacts, unnatural edge blending, or inconsistent textures that hint at fakery.

- Transformer Models: Originally built for natural language processing, transformers are now used to analyze temporal sequences in video and audio. They’re ideal for spotting inconsistencies over time, like a smile that lingers too long or a voice that’s out of sync.

- Adversarial Training: This technique uses a kind of “AI spy vs. AI spy” model. One network generates deepfakes, while the other learns to detect them. The result? A continuous improvement loop where detection models sharpen their skills against increasingly advanced synthetic media.

Together, these methods form the backbone of modern deepfake detection: smart, scalable, and increasingly essential in the fight to preserve digital trust.
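For a feel of what “picking up pixel artifacts” means in a CNN, the sketch below applies a single hand-set Laplacian kernel to a tiny grayscale image with a pasted-in patch. This is an illustration under simplifying assumptions: a real CNN learns many kernels from data instead of using one fixed filter, and the 8x8 image is a made-up example.

```python
def conv2d(image, kernel):
    """Valid 2D cross-correlation of a grayscale image (list of rows) with a small kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(len(image) - kh + 1):
        row = []
        for c in range(len(image[0]) - kw + 1):
            row.append(sum(kernel[i][j] * image[r + i][c + j]
                           for i in range(kh) for j in range(kw)))
        out.append(row)
    return out

# Laplacian kernel: responds to abrupt intensity changes, stays at zero on flat regions.
LAPLACIAN = [[0, 1, 0],
             [1, -4, 1],
             [0, 1, 0]]

# Hypothetical image: flat background (10) with a hard-edged pasted patch (50).
image = [[10] * 8 for _ in range(8)]
for r in range(3, 6):
    for c in range(3, 6):
        image[r][c] = 50

response = conv2d(image, LAPLACIAN)
peak = max(abs(v) for row in response for v in row)
print(peak)  # 80, reached at the corners of the pasted patch
```

The filter output is zero everywhere except along the seam of the patch, which is the kind of localized signal that later layers of a network can combine into a “this region was blended in” judgment.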

Top 5 Tools to Use for Deepfake Detection

As deepfakes become more convincing, a new generation of tools has emerged to help organizations, journalists, and platforms separate fact from fabrication. Here are five of the most effective and widely used deepfake detection tools today:

1. Microsoft Video Authenticator

Developed as part of Microsoft’s Defending Democracy Program, this tool analyzes videos and provides a confidence score that indicates whether media is likely manipulated. It detects subtle fading or blending that may not be visible to the human eye.

Best For: Election integrity, newsrooms, public-facing institutions.

2. Intel FakeCatcher

FakeCatcher stands out for using biological signals to detect fakes. It analyzes subtle changes in blood flow across a subject’s face, based on color shifts in pixels, to verify authenticity.
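The general idea of reading a pulse from pixel color shifts can be sketched as follows. This is not Intel’s implementation; it is a minimal illustration that assumes you already have the average skin-region brightness for each frame, and it simply checks how much of the signal’s energy sits at plausible heart-rate frequencies (0.7 to 4 Hz, a band chosen here as an assumption). The function name and the synthetic signals are hypothetical.

```python
import math
import random

def band_power_ratio(samples, fps, lo_hz=0.7, hi_hz=4.0):
    """Fraction of non-DC spectral power between lo_hz and hi_hz, via a direct DFT."""
    n = len(samples)
    avg = sum(samples) / n
    centered = [s - avg for s in samples]
    in_band = total = 0.0
    for k in range(1, n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(centered))
        power = re * re + im * im
        total += power
        if lo_hz <= k * fps / n <= hi_hz:
            in_band += power
    return in_band / total if total else 0.0

rng = random.Random(0)
fps, seconds = 30, 10
frames = range(fps * seconds)
# Synthetic stand-ins: a 1.2 Hz (72 bpm) pulse plus noise, versus noise alone.
with_pulse = [math.sin(2 * math.pi * 1.2 * t / fps) + rng.gauss(0, 0.2) for t in frames]
no_pulse = [rng.gauss(0, 0.2) for _ in frames]

pulse_ratio = band_power_ratio(with_pulse, fps)
noise_ratio = band_power_ratio(no_pulse, fps)
```

On these synthetic inputs, the signal with a pulse concentrates most of its power in the heart-rate band, while the pulse-free signal spreads its power across all frequencies. A real system would also need face tracking, motion compensation, and robustness to lighting changes.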

Best For: Real-time deepfake detection and high-stakes environments like defense or finance.

3. Deepware Scanner

An easy-to-use platform that scans media files and flags AI-generated content using an extensive database of known synthetic markers. It’s browser-based and doesn’t require coding skills.

Best For: Journalists, educators, and everyday users verifying suspicious videos.

4. Sensity AI (formerly Deeptrace)

Sensity offers enterprise-grade deepfake detection and visual threat intelligence. It monitors dark web sources, social platforms, and video content for malicious synthetic media in real time.

Best For: Large enterprises, law enforcement, and cybersecurity professionals.

5. Reality Defender

Built for enterprise deployment, Reality Defender integrates into existing content moderation and verification pipelines. It combines multiple detection models, including CNNs and transformers, for high-accuracy results.
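Combining several detectors is often done with some form of score fusion. The snippet below is a generic sketch of one simple option, a weighted average, and should not be read as how Reality Defender works internally. The model names, scores, and weights are all hypothetical.

```python
def ensemble_score(scores, weights):
    """Weighted average of per-model fake probabilities (each in [0, 1])."""
    total_weight = sum(weights[name] for name in scores)
    return sum(scores[name] * weights[name] for name in scores) / total_weight

# Hypothetical outputs from three detectors for the same clip.
scores = {"frame_cnn": 0.9, "temporal_transformer": 0.6, "audio_model": 0.3}
# Hypothetical weights, e.g. set from each model's validation accuracy.
weights = {"frame_cnn": 0.5, "temporal_transformer": 0.3, "audio_model": 0.2}

combined = ensemble_score(scores, weights)  # approximately 0.69
```

The appeal of fusion is that a generator tuned to fool one model still has to fool the others, so the combined score tends to be harder to game than any single detector.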

Best For: Social media platforms, ad networks, and digital rights protection.

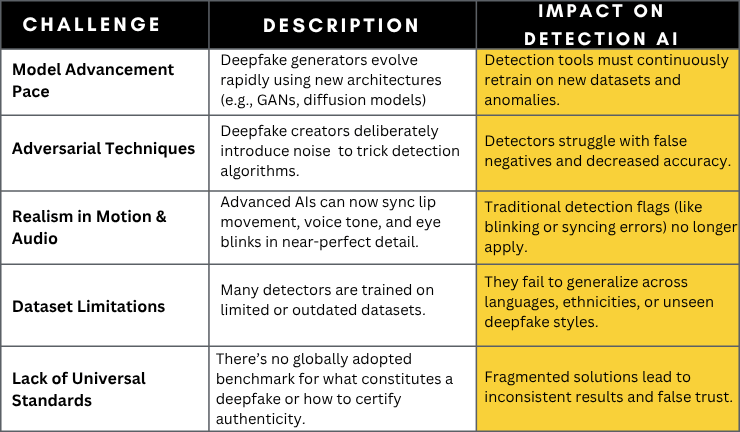

Challenges: Can AI Keep Up With AI?

Welcome to the AI arms race, where the attacker and defender are both machines, evolving in real time.

Every time a new AI model is trained to detect deepfakes, an even more advanced generator model is right behind it, learning how to bypass that very defense. This back-and-forth escalation means today’s best detector can become tomorrow’s outdated relic. It’s a constant game of catch-up, driven by two competing forces:

- Generative AI: Aiming to make synthetic content indistinguishable from reality.

- Detection AI: Striving to find the digital fingerprints that reveal manipulation.

Enterprise Implications: Trust in the Age of Synthetic Media

Deepfakes aren’t just a consumer problem; they’re a corporate crisis waiting to happen. In an era where video can lie and audio can deceive, enterprises across industries must proactively defend trust, reputation, and security. Here’s how deepfake detection is becoming mission-critical for key sectors:

Media & Journalism: Safeguarding Credibility

Fake interviews, synthetic press clips, and impersonated anchors can spread misinformation faster than truth can catch up. Media organizations must adopt deepfake detection to validate sources, protect public trust, and prevent becoming unknowing amplifiers of AI-generated disinformation.

Legal & Compliance: Preserving Evidence Integrity

A deepfake video or audio clip can alter the outcome of legal proceedings. In law, where video depositions, surveillance footage, and recorded testimony are critical, deepfake detection is essential to verify digital evidence and uphold the chain of trust.

Cybersecurity: Stopping Impersonation Attacks

Deepfake voice phishing and impersonation scams are already targeting executives in social engineering attacks. Without real-time detection, businesses risk financial loss, data breaches, and internal sabotage. Cybersecurity teams must now treat synthetic media as a top-tier threat vector.

Human Resources: Preventing Fraudulent Candidates

Fake video resumes and AI-generated interviews can allow bad actors to slip through the hiring process. HR departments need detection tools to verify the authenticity of candidate submissions and protect workplace integrity.

Brand & PR: Protecting Corporate Reputation

A single viral deepfake of a CEO making false statements or of a fake brand scandal can damage years of brand equity. Enterprises need proactive scanning and detection systems to catch fake content before it goes public and disrupts stakeholder confidence.

What’s Next: Explainable AI, Blockchain, and Multimodal Detection

The future of deepfake detection won’t be won by a single algorithm; it will be shaped by ecosystems that combine transparency, traceability, and multi-signal intelligence. As deepfakes evolve, so too must the tools we use to fight them. Here’s what’s next in the race to outsmart synthetic deception:

Explainable AI: Making Detection Transparent

Most AI models operate like black boxes: you get an output but no clue how it was reached. That’s not good enough for high-stakes environments like law, media, or government.

Explainable AI (XAI) offers clarity by showing why a piece of content was flagged as fake, pinpointing anomalies in pixel patterns, inconsistent facial features, or mismatched lip sync. This transparency not only builds user trust, but also aids in forensics and legal validation.
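One common way to show why something was flagged is occlusion: hide one region at a time and see how much the detector’s score drops. The sketch below illustrates that idea on a toy 4x4 image. The `toy_score` function is a hypothetical stand-in for a real detector, and the patch size and fill value are arbitrary choices made for the example.

```python
def occlusion_map(image, score_fn, patch=2, fill=0.0):
    """Score drop when each patch is masked; a larger drop means a more influential region."""
    base = score_fn(image)
    heat = []
    for r in range(0, len(image), patch):
        row = []
        for c in range(0, len(image[0]), patch):
            masked = [list(line) for line in image]  # copy so the input is untouched
            for i in range(r, min(r + patch, len(image))):
                for j in range(c, min(c + patch, len(image[0]))):
                    masked[i][j] = fill
            row.append(base - score_fn(masked))
        heat.append(row)
    return heat

def toy_score(image):
    """Hypothetical stand-in for a detector: largest jump between horizontal neighbors."""
    return max(abs(row[c] - row[c + 1]) for row in image for c in range(len(row) - 1))

# Neutral gray image with one suspicious bright pixel in the top-right block.
image = [[0.5] * 4 for _ in range(4)]
image[0][3] = 0.9

heat = occlusion_map(image, toy_score, patch=2, fill=0.5)
```

Only the top-right block produces a score drop, so that is the region an analyst would be pointed to. The same loop works with any scoring function, which is what makes occlusion a model-agnostic explanation method, though it gets expensive on large images.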

Blockchain: Verifying Media Authenticity at the Source

What if every photo or video came with a verifiable origin stamp?

Blockchain technology is emerging as one way to authenticate digital media at creation. By recording timestamps, device metadata, and cryptographic signatures on-chain, enterprises can verify whether content has been tampered with or remains original, with far less detective work. Related provenance efforts such as the Content Authenticity Initiative (CAI) and Project Origin attach cryptographically signed metadata to media files, an approach that does not necessarily depend on a blockchain.
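Whatever ledger or manifest format stores it, the core of source verification is a content hash plus a signature over the capture metadata. The sketch below shows that core using only Python’s standard library. As a loudly stated assumption, it uses an HMAC with a shared secret as a stand-in for a real public-key signature, and it leaves out the storage layer entirely. The key, device ID, and record layout are hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical shared key; a real system would sign with a device's private key instead.
SECRET_KEY = b"demo-device-key"

def make_record(media_bytes, device_id, timestamp):
    """Build a provenance record: content hash, capture metadata, and a signature over both."""
    payload = {
        "sha256": hashlib.sha256(media_bytes).hexdigest(),
        "device_id": device_id,
        "timestamp": timestamp,
    }
    message = json.dumps(payload, sort_keys=True).encode()
    signature = hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()
    return {**payload, "signature": signature}

def verify(media_bytes, record):
    """True only if the record is unaltered and the media still matches its recorded hash."""
    payload = {k: v for k, v in record.items() if k != "signature"}
    message = json.dumps(payload, sort_keys=True).encode()
    expected = hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, record["signature"])
    content_ok = hashlib.sha256(media_bytes).hexdigest() == record["sha256"]
    return signature_ok and content_ok

record = make_record(b"raw video bytes", "cam-01", "2024-01-01T00:00:00Z")
print(verify(b"raw video bytes", record))     # True
print(verify(b"edited video bytes", record))  # False
```

Changing a single byte of the media, or any field in the record, makes verification fail. Note what this does and does not prove: it shows the file is unchanged since signing, not that the original capture was truthful.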

Multimodal Detection: Combining Visual, Audio, and Text Signals

Today’s best detection tools don’t just look; they listen and read too.

Multimodal AI cross-analyzes video, voice, and text data to spot inconsistencies. For example, it can check if lip movement matches audio, if the spoken tone fits the scene, or if background sounds were artificially added. This holistic approach dramatically improves accuracy in identifying deepfakes, especially in complex or high-resolution media.
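The lip-sync check lends itself to a small worked example. The sketch below assumes two per-frame series are already available, a mouth-opening measurement and an audio loudness value, and looks for the frame shift at which they correlate best. The function names and the sample numbers are hypothetical, and real systems use learned audio-visual embeddings in place of two hand-picked signals.

```python
from statistics import mean

def pearson(xs, ys):
    """Pearson correlation of two equal-length series; 0.0 if either is constant."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / (var_x * var_y) ** 0.5

def best_sync(mouth_open, audio_energy, max_lag=3):
    """Return (lag, correlation) for the frame shift that best aligns the two series.

    A positive lag means the mouth movement trails the audio by that many frames.
    Both series are assumed to have the same length.
    """
    best = (0, -1.0)
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            a, b = mouth_open[lag:], audio_energy[:len(audio_energy) - lag]
        else:
            a, b = mouth_open[:lag], audio_energy[-lag:]
        r = pearson(a, b)
        if r > best[1]:
            best = (lag, r)
    return best

# Hypothetical per-frame audio loudness and two mouth-opening tracks.
audio = [0, 1, 4, 2, 0, 3, 5, 1, 0, 2, 4, 1]
mouth_synced = [0.2 * a for a in audio]   # moves with the audio
mouth_delayed = [0, 0] + audio[:-2]       # trails the audio by two frames

synced_lag, synced_r = best_sync(mouth_synced, audio)
delayed_lag, delayed_r = best_sync(mouth_delayed, audio)
```

Here the synced track aligns best at a lag of zero and the delayed track at a lag of two frames. A detector could treat a consistent non-zero lag, or a weak correlation at every lag, as one signal among several that the audio and video did not originate together.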

Wrapping Up: Fighting Deepfakes with Smarter AI, Built Ethically

As synthetic media becomes indistinguishable from reality, the question isn’t if your organization will face a deepfake threat, but when. The only way to stay ahead? Invest in AI that’s not just reactive, but resilient, explainable, and secure.

At ISHIR, we specialize in building enterprise-grade AI systems that can detect, flag, and learn from evolving threats like deepfakes. Whether you’re in media, law, HR, or cybersecurity, we help you:

- Implement custom AI detection models trained on real-world threat data

- Integrate multimodal detection frameworks for voice, video, and text verification

- Use Explainable AI to ensure transparency, traceability, and auditability

- Apply blockchain-based authenticity protocols to safeguard digital assets

Our solutions aren’t one-size-fits-all; they’re purpose-built to make your business unbreakable in the face of digital deception.

Is your organization ready to trust what it sees, hears, and shares?

Build your deepfake defense stack with ISHIR’s AI expertise.

The post Can AI Be Trained to Spot Deepfakes Made by Other AI? appeared first on ISHIR | Software Development India.

*** This is a Security Bloggers Network syndicated blog from ISHIR | Software Development India authored by Eric Soon. Read the original post at: https://www.ishir.com/blog/199219/can-ai-be-trained-to-spot-deepfakes-made-by-other-ai.htm