Google Boosts LiteRT and Gemini Nano for On-Device AI Efficiency

The new release of LiteRT, previously known as TensorFlow Lite, introduces a streamlined API that simplifies on-device machine learning (ML) inference, enhanced GPU acceleration, and support for Qualcomm NPU (Neural Processing Unit) accelerators. This is aimed at making it easier for developers to utilize GPU and NPU acceleration without needing specific APIs or vendor SDKs.

"By accelerating your AI models on mobile GPUs and NPUs, you can speed up your models by up to 25x compared to CPU while also reducing power consumption by up to 5x."

The LiteRT release features MLDrift, a new GPU acceleration implementation that offers more efficient tensor-based data organization, context-aware smart computations, and optimized data transfer. This results in significantly faster performance for CNN and Transformer models compared to CPUs and even previous versions of TFLite’s GPU delegate.

LiteRT now supports NPUs, which can potentially deliver up to 25x faster performance than CPUs while consuming one-fifth of the power. To address the integration challenges of these accelerators, LiteRT adds support for Qualcomm and MediaTek's NPUs, making it easier to deploy models for vision, audio, and NLP tasks.

Developers can specify their target backend using the CompiledModel::Create method, which supports various backends including CPU, XNNPack, GPU, NNAPI, and EdgeTPU. The new TensorBuffer API eliminates unnecessary data copies between GPU and CPU memory, while asynchronous execution allows different parts of a model to run concurrently across different processors, reducing latency by up to 2x.

LiteRT can be downloaded from GitHub and includes sample apps that demonstrate its capabilities.

Image courtesy of Google Developers Blog

Simplified GPU and NPU Hardware Acceleration

The latest LiteRT APIs have significantly simplified the setup for using GPUs and NPUs. Developers can specify their target backend with ease. For example, creating a compiled model targeting GPU can be done with just a few lines of code:

// 1. Load model.

auto model = *Model::Load("mymodel.tflite");

// 2. Create a compiled model targeting GPU.

auto compiled_model = *CompiledModel::Create(model, kLiteRtHwAcceleratorGpu);

This ease of use is complemented by features that enhance inference performance, particularly in memory-constrained environments. The TensorBuffer API allows for direct utilization of data residing in hardware memory, which minimizes CPU overhead and maximizes performance.

The asynchronous execution feature enables concurrent processing across CPU, GPU, and NPUs. This parallel processing capability enhances efficiency and responsiveness, crucial for real-time AI applications.

For further exploration, refer to the documentation on TensorBuffer and asynchronous execution.

On-device Small Language Models with Multimodality

Google AI Edge has expanded support for on-device small language models (SLMs) with the introduction of over a dozen models, including the new Gemma 3 and Gemma 3n models. These models support text, image, video, and audio inputs, allowing for broader application of generative AI.

Developers can find these models in the LiteRT Hugging Face Community and utilize them with minimal code. The models are optimized for mobile and web use, with full instructions available in the documentation.

Gemma 3 1B is designed to operate efficiently on mobile devices, processing up to 2,585 tokens per second. The introduction of Retrieval Augmented Generation (RAG) allows small language models to be augmented with application-specific data without fine-tuning.

"The AI Edge RAG library works with any of our supported small language models."

With function calling capabilities, on-device language models can interactively engage with applications, allowing for a more dynamic user experience. Developers can reference the AI Edge Function Calling library to integrate these features into their applications.

Image courtesy of Google Developers Blog

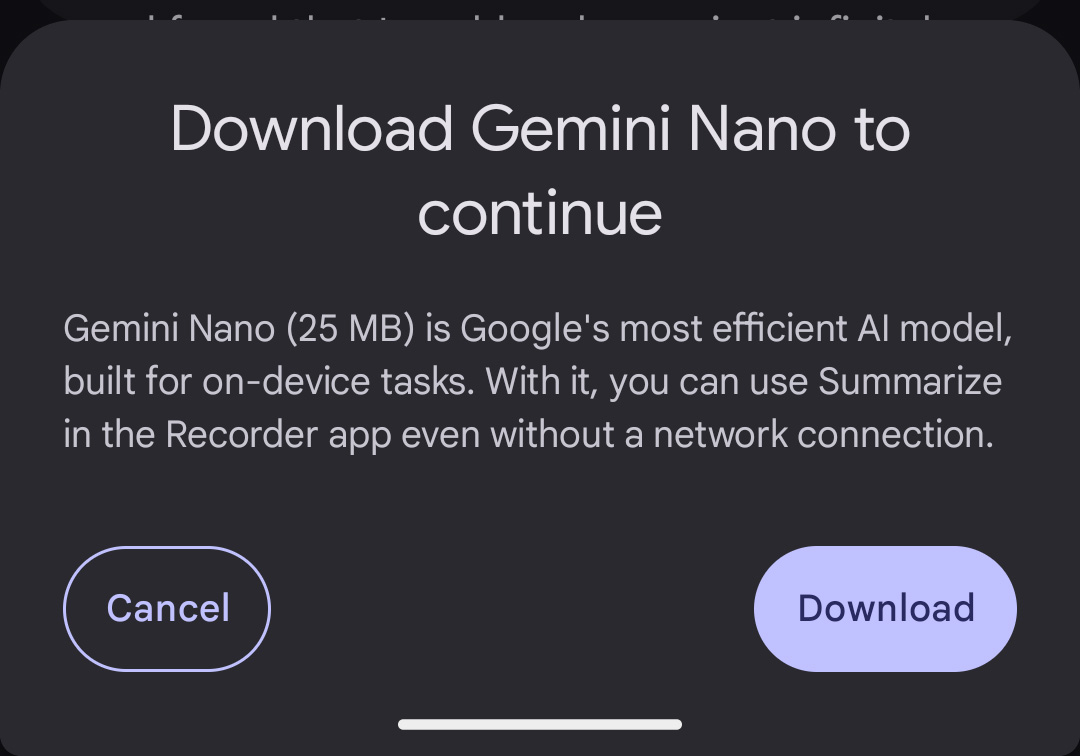

Google to Give App Developers Access to Gemini Nano for On-Device AI

Google is set to announce new APIs for ML Kit that will enable developers to utilize the capabilities of Gemini Nano for on-device AI. This update aims to simplify the integration of generative AI features within applications, allowing for functionalities such as summarization, proofreading, and image description without the need to send data to the cloud.

The Gemini Nano model has various versions, with the standard version (Gemini Nano XS) being around 100MB in size. As developers look to implement local AI features, the new APIs should make this process more efficient.

Developers interested in these updates can refer to the documentation for a comprehensive overview. This move is seen as vital for fostering a more consistent mobile AI experience, enabling capabilities that previously required substantial cloud resources.

Image courtesy of Ars Technica

For enterprises looking to implement secure SSO and user management, SSOJet offers an API-first platform featuring directory sync, SAML, OIDC, and magic link authentication. Explore our services or contact us at SSOJet to streamline your authentication processes. Visit our website at https://ssojet.com for more information.

*** This is a Security Bloggers Network syndicated blog from SSOJet authored by Devesh Patel. Read the original post at: https://ssojet.com/blog/google-boosts-litert-and-gemini-nano-for-on-device-ai-efficiency/