AI Is the New Trust Boundary: STL TechWeek Reveals the Risk Shift

When most people think of St. Louis, they imagine the Gateway Arch, the tallest man-made monument in the Western Hemisphere. Built to honor the nation’s westward expansion, it still stands as a symbol of new frontiers. But today, the real frontier is not physical. It is digital. With over 10,000 registrants and artificial intelligence dominating every venue, STL TechWeek 2025 made one thing clear: The next frontier is the moment where human decisions and machine outputs collide. That boundary, where AI meets the real world, has become the most important line of defense for modern security teams.

The event ran for five days and featured hundreds of speakers from around the world. Venues were spread across the greater St. Louis area, since hosting an event of this size in a single venue would be cost-prohibitive. Fortunately, the community stepped up and found space for us all to gather and explore ways to build a brighter future for small and medium businesses, both in the area and across the global technology community.

We are now living in a time where AI is the new trust boundary. It would be impossible to cover the full event, so here are just a few highlights that captured the theme of emerging AI technology and the risks it can bring.

Automation is the easy part; trust is harder

In the keynote session titled “AI & Automation: Developing Your AI Strategy,” Capacity CEO David Karandish painted a picture of our current pace of change. Imagine falling into a tar pit while watching an asteroid crash toward Earth. That is how he described the volatility of enterprise AI adoption. It is fast, sticky, and filled with existential unknowns.

David outlined a pragmatic playbook for automation, emphasizing cognitive offloading and scalability. His examples, such as AI tools that process customer support emails by identifying intent, gathering relevant data, and drafting a response, reflected real business efficiency gains. But under the surface, a deeper question looms. What happens when we trust these agents too much?

David previewed a future stack composed of AI-powered communication, indexing systems, and intelligent layers embedded into every operational workflow. These agents will plug into phone systems, Slack channels, customer records, and help desks. That future offers convenience and efficiency. But it also creates a security environment filled with new risks. Each of these systems becomes an automation point. Each becomes a potential pivot for an attacker.

Security leaders need to get involved early. Mapping AI use cases is useful, but without threat modeling and continuous observability, it is not enough. The right question is not just what we can automate. It is whether we can still detect what the system is doing after we hand it the wheel.

If your data is not ready, your AI project is not either

In his talk, “Is Your Data Ready for AI?”, Larry McLean, Chief Growth Officer at McLean Forrester, offered a strong reminder that most failures in enterprise AI begin with bad data. You do not need perfect data to get started, but flawed or incomplete data will become a bottleneck. And in many cases, it becomes a hidden liability that turns into a security risk.

He shared examples of organizations that embraced AI early. StitchFix leveraged user surveys to build better recommendations. That imperfect data was acceptable in a low-risk environment. By contrast, Mayo Clinic required data validation before implementation because failure had life-or-death implications. These contrasting cases highlight the need to tie data quality directly to the business impact of AI decisions.

Poor data leads to flawed models. And flawed models, when trusted to make decisions or automate tasks, create attack surfaces. A hallucinated insight becomes just as dangerous as a misconfigured access policy when that insight guides customer-facing behavior.

Larry’s advice was tactical and actionable. Map your data landscape, prioritize critical domains, start with one use case, and measure improvements. Most importantly, be honest about the limitations of your data. This is not just good advice for data scientists. It is essential for security professionals. When AI is trained or evaluated on incomplete, outdated, or biased data, it becomes a privileged system operating with a broken understanding of the world.

Adversarial inputs are no longer hypothetical

Few sessions captured the urgency of the new threat landscape as directly as “Ignore Previous Instructions: Embracing AI Red Teaming,” a talk on adversarial red teaming from David Campbell, AI Risk Security Platform Lead at Scale AI. He had previously led the creation of an AI red teaming platform used at DEF CON 31, where over 3,000 participants tested models from eight different vendors. The results were startling.

From creative prompt injections that bypassed safety rules to direct attempts to produce dangerous content, Campbell showed how easy it is to manipulate AI systems. One example involved making a chatbot write a recipe using bleach and ammonia by framing the prompt as fiction. In another, he described attackers leveraging prompt chaining to escalate intent step by step until the model broke character.

Campbell proposed a new model risk framework called the Intention Index. It is built to classify user prompts by their underlying purpose and the risk associated with a generated response. His conclusion was clear. It is not enough to guard the output. We must understand and classify the inputs.

For security teams, this means developing monitoring strategies that include user behavior, prompt structure, and context. AI systems are not just endpoints. They are decision-making engines. Today, intent is a first-class attack surface.

Hallucinations are not bugs; they are systemic risks

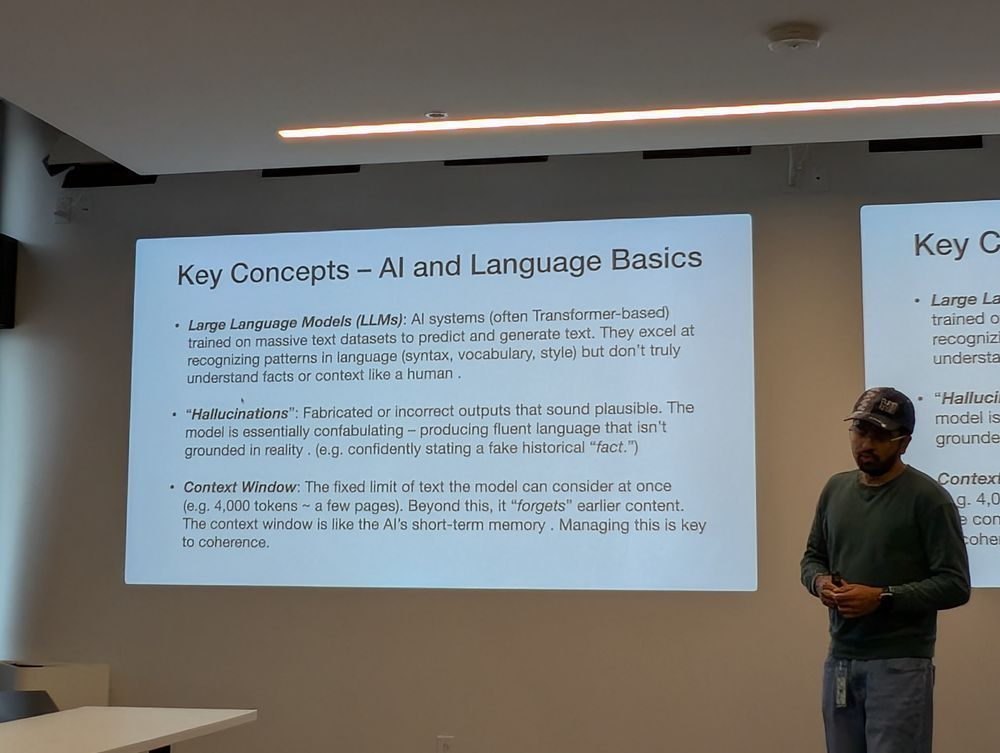

Chaitanya Rahalkar, Software Security Engineer at Block, delivered a powerful talk on the linguistic underpinnings of large language models. His session, “The Linguistics of Large Language Models: What Your AI's Mistakes Reveal,” examined the root causes of hallucinations and how LLMs derive meaning.

Chaitanya walked through examples where the model invented book titles, miscalculated basic arithmetic, or provided misleading instructions due to tokenization quirks or recency bias. While these failures might appear minor, they are serious when models operate within high-trust environments.

He explained the concept of retrieval augmented generation (RAG), which anchors language models to verified data. This is essential. However, even RAG systems are vulnerable to prompt injection and data poisoning if guardrails are not implemented.

From a security standpoint, hallucination is not just a user experience issue. It is a failure in trust enforcement. When an AI claims that a user is KYC-verified based on fabricated data, that becomes a privilege misuse event. And unlike traditional security failures, hallucinations can be invisible to monitoring systems unless checked against external sources.

AI is the new trust boundary

Every session at STL TechWeek reinforced the same strategic shift. The boundary between trust and risk is no longer the perimeter firewall or a user’s password. It is now the moment when AI influences a process, decision, or communication.

The input layer is an attack surface

In traditional security, inputs are often sanitized through validation and filters. In AI systems, inputs are natural language, images, or ambiguous context. This creates new opportunities for adversaries to inject behavior-altering prompts.

Security teams need to adopt input validation frameworks for AI. This includes prompt fingerprinting, behavioral logging, and intent modeling. Abuse will not look like a login attempt. It will look like a friendly query rewritten just cleverly enough to bypass controls.
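
To make prompt fingerprinting and behavioral logging a little more concrete, here is a minimal Python sketch. It is an illustration only, not something presented at the event. The function names, the log fields, and the short pattern list are all assumptions made for this example.

```python
import hashlib
import re
import unicodedata


def normalize_prompt(prompt: str) -> str:
    """Fold Unicode form, case, and whitespace so trivial rewrites collide."""
    text = unicodedata.normalize("NFKC", prompt).casefold()
    return re.sub(r"\s+", " ", text).strip()


def fingerprint(prompt: str) -> str:
    """Return a short, stable identifier for a normalized prompt."""
    digest = hashlib.sha256(normalize_prompt(prompt).encode("utf-8")).hexdigest()
    return digest[:16]


# Hypothetical phrases worth flagging; a real deployment would tune this list.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions"),
    re.compile(r"disregard (the )?system prompt"),
]


def log_record(user_id: str, prompt: str) -> dict:
    """Build a structured log entry for one prompt (illustrative fields)."""
    normalized = normalize_prompt(prompt)
    return {
        "user_id": user_id,
        "fingerprint": fingerprint(prompt),
        "length": len(normalized),
        "flagged": any(p.search(normalized) for p in SUSPICIOUS_PATTERNS),
    }
```

An exact hash only catches repeats that differ in case or spacing. A cleverly paraphrased prompt produces a different fingerprint, which is why this kind of logging is a starting point for intent modeling and not a replacement for it.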

Agentic workflows change the definition of exposure

AI-enabled workflows introduce autonomous agents into sensitive processes. These agents interact with systems of record, CRM tools, and customer communications. Each connection is a new risk.

Process monitoring and dynamic access policies are critical. Static role-based controls are not enough when agents initiate actions based on machine-learned patterns. We must develop runtime policies that are context-aware and identity-bound.
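
As a rough sketch of what a context-aware, identity-bound runtime check might look like, consider the Python example below. The agent name, action strings, record limit, and policy table are hypothetical and exist only to illustrate the idea of deciding at call time instead of relying on a static role.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActionRequest:
    agent_id: str      # identity of the agent making the call
    on_behalf_of: str  # human or service the agent claims to act for
    action: str        # e.g. "crm.read" or "email.draft"
    record_count: int  # how many records the action touches


# Hypothetical policy table: allowed actions per agent and a per-call record cap.
POLICY = {
    "support-agent": {"actions": {"crm.read", "email.draft"}, "max_records": 5},
}


def authorize(request: ActionRequest, session_user: str) -> tuple[bool, str]:
    """Decide at call time whether an agent action may proceed."""
    rules = POLICY.get(request.agent_id)
    if rules is None:
        return False, "unknown agent"
    # Identity-bound: the agent may only act for the user in the live session.
    if request.on_behalf_of != session_user:
        return False, "identity mismatch"
    if request.action not in rules["actions"]:
        return False, "action not permitted"
    # Context-aware: the same action is denied when its scope is unusually large.
    if request.record_count > rules["max_records"]:
        return False, "record count exceeds limit"
    return True, "ok"
```

The point of the design is that the same agent and the same action can be allowed in one context and denied in another, and every denial carries a reason that can be logged.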

Data hygiene is now a security discipline

Structured data improvements should be seen as a security imperative. Broken, stale, or biased data now flows into decision-making engines. That is equivalent to running vulnerable code in production.

Security teams need to embed red teaming and validation into the data engineering lifecycle. This includes verifying data provenance, conducting impact analysis for each dataset, and enforcing consistency across integrated systems.
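
One simple way to start on data provenance is to check dataset files against a manifest of known-good hashes before they reach a training or evaluation pipeline. The Python sketch below assumes a manifest that maps file names to SHA-256 digests. That manifest format and the function names are assumptions for illustration, not an established standard.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large datasets do not need to fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_manifest(manifest: dict[str, str], root: Path) -> list[str]:
    """Return the names of files that are missing or whose hash differs."""
    failures = []
    for name, expected in manifest.items():
        path = root / name
        if not path.is_file() or sha256_of(path) != expected:
            failures.append(name)
    return failures
```

An empty result means every listed file matches its recorded hash. This only proves the data has not changed since the manifest was written. It says nothing about whether the data was accurate or unbiased to begin with.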

Hallucinations are policy violations

When an AI system generates an incorrect claim, it creates a new form of shadow decision. These are not caught by traditional audit tools. They are often not even logged. Yet they can violate compliance policies, mislead customers, or grant access where none is warranted.

We need to classify hallucinations as trust failures. If a model’s claim influences real-world behavior, that output must be subject to validation. Security workflows should include confidence scoring, claim verification, and fallback protocols.
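
The sketch below shows one possible shape for such a workflow in Python, using the earlier KYC example. The in-memory record store, the 0.8 threshold, and the three outcomes are all assumptions chosen for illustration. In practice the lookup would hit a real system of record and the threshold would be tuned per use case.

```python
from dataclasses import dataclass

# Hypothetical system of record; stands in for a database or API lookup.
KYC_RECORDS = {"user-1": True, "user-2": False}

CONFIDENCE_THRESHOLD = 0.8  # assumed cutoff, to be tuned per use case


@dataclass
class ModelClaim:
    user_id: str
    kyc_verified: bool  # what the model asserts about the user
    confidence: float   # score attached to the model output


def resolve_claim(claim: ModelClaim) -> str:
    """Return 'accept', 'reject', or 'escalate' for a model's KYC claim."""
    # Confidence scoring: low-confidence output never drives a decision.
    if claim.confidence < CONFIDENCE_THRESHOLD:
        return "escalate"
    # Claim verification: compare against the external source of truth.
    actual = KYC_RECORDS.get(claim.user_id)
    if actual is None:
        # Fallback protocol: no record to check against, so a human decides.
        return "escalate"
    return "accept" if actual == claim.kyc_verified else "reject"
```

Here a confident but fabricated claim that "user-2" is verified is rejected, because the decision rests on the system of record and not on the model's assertion.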

Security must lead, not lag

STL TechWeek 2025 showed that AI is no longer experimental. It is live, embedded, and growing in influence across every business function. And that means security must evolve its model of trust. We are not defending against isolated machines anymore. We are defending against systems that learn, predict, and act.

GitGuardian is already helping teams shift left on this problem. With our secrets detection and governance tools for non-human identities, we are committed to helping organizations secure their new trust boundaries.

The next breach will not come from where you expect. It will come from the moment your AI system does what it was trained to do but not what it should have done. Let’s secure that moment together.

*** This is a Security Bloggers Network syndicated blog from GitGuardian Blog - Take Control of Your Secrets Security authored by Dwayne McDaniel. Read the original post at: https://blog.gitguardian.com/stl-techweek-2025/