How to Identify Suspect Temporal Patterns in Traffic Data

At DataDome, we use machine learning (ML) models to detect threats by leveraging the large volume of signals collected from our customers. In fact, our detection engine processes more than 3 trillion signals per day! The more data we can gather, the better our models perform against both known and previously unknown threats.

One method we use to process data is a time series analysis, which identifies suspect behaviors over different time scales—from huge spikes to “low and slow” bots—by focusing on temporal (time-based) patterns in traffic.

Segmenting Traffic

To start, what time series are we talking about?

The web traffic we gather can be segmented into several sub-time series.

1. Gather Global Traffic Overview

First, each DataDome customer has its own characteristics: each faces particular challenges and a different mix of threats. Therefore, we analyze the traffic per customer: this is the first level of segmentation.

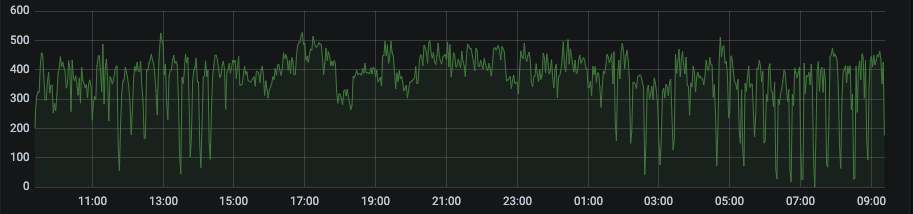

One day of traffic for a specific DataDome customer.

2. Split Global Traffic by Chosen Category

For any given customer, we split the global traffic into multiple segments based on a categorical field. The cardinality of that field (i.e., its number of distinct values) determines how many sub-groups (time series) are considered. The higher the cardinality, the more time series we get. However, there is a trade-off: if the segmentation is too granular, each time series contains too little traffic to be useful.

In this article, we chose autonomous systems (AS) as the segmentation field. An AS is a group of one or more IP prefixes run by one or more network operators that maintain a single, clearly defined routing policy. Examples of autonomous systems in the US are AT&T, Comcast, and Verizon.

One day of traffic for a specific customer, segmented by AS. For readability, only a subset of the AS is displayed.
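As a rough illustration of the two segmentation levels described above, the following sketch counts requests per customer and per AS in fixed time buckets. The record fields (`customer`, `autonomous_system`, `timestamp`) and the 5-minute bucket size are assumptions made for this example, not a description of DataDome's actual data model.

```python
from collections import Counter, defaultdict
from datetime import datetime, timezone

BUCKET_SECONDS = 300  # assumed resolution: one point every 5 minutes


def to_bucket(ts, bucket_seconds=BUCKET_SECONDS):
    """Return the start of the bucket containing ts, as a Unix timestamp."""
    epoch = int(ts.timestamp())
    return epoch - epoch % bucket_seconds


def segment_traffic(requests, field="autonomous_system"):
    """Build one time series (bucket -> request count) per (customer, field value)."""
    series = defaultdict(Counter)
    for req in requests:
        key = (req["customer"], req[field])
        series[key][to_bucket(req["timestamp"])] += 1
    return series


def utc(hour, minute):
    return datetime(2023, 2, 12, hour, minute, tzinfo=timezone.utc)


# Hypothetical request log for a single customer.
requests = [
    {"customer": "shop-a", "autonomous_system": "AT&T", "timestamp": utc(10, 1)},
    {"customer": "shop-a", "autonomous_system": "AT&T", "timestamp": utc(10, 3)},
    {"customer": "shop-a", "autonomous_system": "AT&T", "timestamp": utc(10, 7)},
    {"customer": "shop-a", "autonomous_system": "Comcast", "timestamp": utc(10, 2)},
]

series = segment_traffic(requests)
for key, counts in series.items():
    print(key, sorted(counts.items()))
```

Passing a different `field` changes the segmentation, which is where the cardinality trade-off shows up: a field with many distinct values produces many sparse series.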

3. Apply ML Model(s)

From here, several ML models are possible. We will present two of them:

- An unsupervised learning approach.

- A supervised learning approach.

Machine Learning Modeling

Unsupervised Learning Example

Unsupervised methods try to automatically find interesting activity patterns in unlabeled data. One unsupervised learning approach is clustering—or grouping similar time series together. By analyzing clusters and distances to clusters, we can identify suspicious patterns and/or suspicious time series.

The above example of a clustering pipeline is composed of 3 steps:

- Feature Extraction: Processing and aggregating raw signals from the time series. The explanatory variables—which can be statistical characteristics such as the mean, variance, minimum, maximum, etc. of each time series—feed the ML model.

- Clustering: Grouping similar time series together, which requires the choice of a similarity measure (Euclidean distance, correlation, etc.) as well as the choice of an algorithm (K-means, DBSCAN, etc.).

- Visualization: Understanding the results can be much more difficult than it seems, because it requires reducing the dimensionality of our training set so that it can be plotted on a plane. Specific algorithms exist to complete this task: PCA, t-SNE, etc.
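The first two steps can be sketched with the Python standard library alone. This is a minimal illustration under stated assumptions: the four statistics are the ones named above, the toy series are invented, and the deterministic farthest-first initialization is a simplification chosen for reproducibility (production code would more likely rely on a library implementation such as scikit-learn's).

```python
import math
import statistics


def extract_features(series):
    """Summarize one time series (a list of request counts) as a feature vector."""
    return [
        statistics.fmean(series),
        statistics.pvariance(series),
        min(series),
        max(series),
    ]


def standardize(rows):
    """Rescale every feature column to zero mean and unit standard deviation."""
    columns = list(zip(*rows))
    means = [statistics.fmean(c) for c in columns]
    stds = [statistics.pstdev(c) or 1.0 for c in columns]  # guard constant columns
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]


def farthest_first(points, k):
    """Pick the first point, then repeatedly the point farthest from those chosen."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(
            max(points, key=lambda p: min(math.dist(p, c) for c in centroids))
        )
    return [list(c) for c in centroids]


def kmeans(points, k, max_iterations=100):
    """Lloyd's algorithm with Euclidean distance; stops when assignments are stable."""
    centroids = farthest_first(points, k)
    labels = None
    for _ in range(max_iterations):
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_labels == labels:
            break
        labels = new_labels
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the previous centroid if a cluster is empty
                centroids[c] = [statistics.fmean(col) for col in zip(*members)]
    return labels, centroids


# Invented toy data: two nearly constant series and two day/night-shaped series.
toy_series = {
    "flat-1": [50, 51, 49, 50, 50, 51],
    "flat-2": [48, 50, 50, 49, 51, 50],
    "daynight-1": [5, 10, 80, 120, 90, 15],
    "daynight-2": [8, 12, 85, 110, 95, 20],
}
features = standardize([extract_features(s) for s in toy_series.values()])
labels, centroids = kmeans(features, k=2)
print(dict(zip(toy_series, labels)))
```

Standardizing before clustering matters here because the variance feature is orders of magnitude larger than the others and would otherwise dominate the Euclidean distance.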

A visualization example of potential results using the K-means clustering algorithm, with K set to 4.

Four clusters have been identified above. Two are composed of a majority of time series identified as bots, the two others as human. The proportion of each class can be used to assess the cluster label.

For each cluster, the time series closest to the cluster’s centroid is displayed. The centroid is the imaginary “location” at the center of the cluster: for K-means, it is the mean of the samples assigned to that cluster, so the series closest to it is a good representative of the group.
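The two operations just described, labeling a cluster by its majority class and picking the series closest to the centroid, can be sketched as follows. The points and labels are invented for the example, and the helper names are hypothetical.

```python
import math
import statistics
from collections import Counter


def centroid_of(points):
    """Mean of the points, column by column."""
    return [statistics.fmean(col) for col in zip(*points)]


def closest_to_centroid(points, centroid):
    """Index of the sample with the smallest Euclidean distance to the centroid."""
    return min(range(len(points)), key=lambda i: math.dist(points[i], centroid))


def majority_label(labels):
    """Most common label in the cluster and the share of members that carry it."""
    label, count = Counter(labels).most_common(1)[0]
    return label, count / len(labels)


# One hypothetical cluster: feature vectors and the bot/human label of each series.
cluster_points = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [1.0, 1.2]]
cluster_labels = ["bot", "bot", "human", "bot"]

centroid = centroid_of(cluster_points)
representative = closest_to_centroid(cluster_points, centroid)
label, share = majority_label(cluster_labels)
print(representative, label, share)
```

A low majority share is itself informative: it points to a cluster that mixes bot and human series and may deserve a closer look.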

- One bot cluster corresponds to an “On/Off” pattern, while the other corresponds to a strongly periodic pattern.

One time series lies between the two bot clusters. It shares characteristics with both: it shows a periodic “On/Off” pattern.

Supervised Learning Example

Supervised learning uses labeled datasets to train algorithms to classify data or predict outcomes accurately. We introduce the bot/human label from the DataDome solution to split our AS time series into bot time series and human time series. The model will then evaluate each time series to determine if it’s a bot or a human time series.

Note that this approach will double the number of time series: bot time series by AS and human time series by AS.

The supervised pipeline shown above is composed of 3 steps:

- Feature Extraction: Can be the same as for clustering, i.e., processing and aggregating raw signals from the time series. The explanatory variables (statistical characteristics such as the mean, variance, minimum, maximum, etc. of each time series) feed the ML model.

- Model Training: Learning the relationship between our features and the bot/human label from the training data. Candidate models include linear models, ensemble methods, etc.

- Model Evaluation: Scoring a held-out subset of time series for which the label is already known, and comparing the scores with those labels.
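To make the training and evaluation steps concrete, here is a minimal sketch using logistic regression, one example of a linear model, fitted by batch gradient descent. The feature values, the label encoding (1 for bot, 0 for human), and the hyperparameters are all assumptions for illustration; the article does not state which model is actually used.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def score(x, weights, bias):
    """Estimated probability that a feature vector belongs to a bot time series."""
    return sigmoid(sum(w * v for w, v in zip(weights, x)) + bias)


def train_logistic_regression(rows, labels, learning_rate=0.1, epochs=2000):
    """Fit weights and bias by batch gradient descent on the log loss."""
    weights = [0.0] * len(rows[0])
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in zip(rows, labels):
            error = score(x, weights, bias) - y
            for j, v in enumerate(x):
                grad_w[j] += error * v
            grad_b += error
        weights = [w - learning_rate * g / len(rows) for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / len(rows)
    return weights, bias


# Invented, already standardized features; 1 = bot time series, 0 = human.
train_rows = [
    [-1.0, -0.8], [-1.2, -1.0], [-0.9, -1.1],
    [1.0, 0.9], [1.1, 1.2], [0.8, 1.0],
]
train_labels = [1, 1, 1, 0, 0, 0]
weights, bias = train_logistic_regression(train_rows, train_labels)

# Evaluation on held-out series whose label is already known.
test_rows = [[-1.1, -0.9], [0.9, 1.1]]
test_labels = [1, 0]
predictions = [int(score(x, weights, bias) >= 0.5) for x in test_rows]
accuracy = sum(p == y for p, y in zip(predictions, test_labels)) / len(test_labels)
print(predictions, accuracy)
```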

To stop more bots, we are interested in time series labeled as human by DataDome’s current detection system, but with a high bot score from the model. Such a disagreement can be either an error of the newly trained model or a misclassification by the existing detection engine.

A more in-depth investigation is required to understand where the error is coming from and mitigate it.
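Selecting the time series worth investigating could look like the sketch below. The score threshold of 0.8, the input structure, and the AS names are assumptions for this example.

```python
def missed_bot_candidates(series_scores, threshold=0.8):
    """Return human-labeled series with a model score >= threshold, highest first."""
    candidates = [
        (key, model_score)
        for key, (current_label, model_score) in series_scores.items()
        if current_label == "human" and model_score >= threshold
    ]
    return sorted(candidates, key=lambda item: item[1], reverse=True)


# Hypothetical input: AS -> (label from the current detection, model bot score).
series_scores = {
    "AS-A": ("human", 0.05),  # both agree: human
    "AS-B": ("human", 0.93),  # disagreement: worth investigating
    "AS-C": ("bot", 0.97),    # both agree: bot
    "AS-D": ("human", 0.85),  # disagreement: worth investigating
}

candidates = missed_bot_candidates(series_scores)
print(candidates)
```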

One day of traffic for a specific customer and AS (Chinanet) split by traffic label. In red, the traffic identified as bot by DataDome.

Patterns Blocked by the Model

Next, we combine the supervised modeling described above with Sliceline, which is the open-source package we released last year, designed for fast slice finding for ML model debugging. (A slice is a subspace of the input dataset defined by one or more predicates. You can read more about how Sliceline spots ML model errors on our blog and in our Sliceline documentation.)

This combination lets us automatically generate blocking rules based on temporal patterns.

- From the time series, we identify two populations of AS:

  - AS with human-like time series, where both the detection engine and the ML model agree on the human label.

  - AS with bot-like time series, where the detection engine sees a human, but the ML model indicates a potential bot.

- Then, we switch from behavioral detection based on time series to signature-based detection. Instead of applying Sliceline on the AS time series and the features previously computed, we apply it to the signatures of the HTTP requests making up the time series.

The ML model learns to identify abnormal temporal behavior. It scores each AS to surface bot activity that the existing detection missed. Then Sliceline discovers what makes a request from our suspicious AS a bot request. That way, we take advantage of the great diversity of signals available at the request level: user-agent, method, host, etc.
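To give an intuition of what slice finding does at the request level, the sketch below enumerates conjunctions of `field = value` predicates by brute force and ranks them by the share of requests coming from suspicious AS. It is a deliberately naive stand-in: it does not use Sliceline's API or reproduce its algorithm, and the field names, values, and thresholds are invented.

```python
from itertools import combinations


def find_slices(requests, is_suspicious, fields, max_predicates=2, min_support=2):
    """Rank conjunctions of field=value predicates by their share of suspicious requests.

    Returns a list of (suspicious_share, support, predicates) tuples, best first.
    """
    results = []
    for size in range(1, max_predicates + 1):
        for chosen in combinations(fields, size):
            groups = {}
            for req, flag in zip(requests, is_suspicious):
                key = tuple(req[f] for f in chosen)
                total, bad = groups.get(key, (0, 0))
                groups[key] = (total + 1, bad + int(flag))
            for key, (total, bad) in groups.items():
                if total >= min_support:  # ignore slices with too few requests
                    results.append((bad / total, total, dict(zip(chosen, key))))
    results.sort(key=lambda r: (-r[0], -r[1]))
    return results


# Hypothetical request signatures; the flag marks requests from bot-like AS.
requests = [
    {"user_agent": "ua-old-firefox", "method": "POST", "host": "shop.example"},
    {"user_agent": "ua-old-firefox", "method": "POST", "host": "shop.example"},
    {"user_agent": "ua-old-firefox", "method": "GET", "host": "shop.example"},
    {"user_agent": "ua-chrome", "method": "POST", "host": "shop.example"},
    {"user_agent": "ua-chrome", "method": "GET", "host": "shop.example"},
    {"user_agent": "ua-chrome", "method": "GET", "host": "shop.example"},
]
is_suspicious = [True, True, False, False, False, False]

slices = find_slices(requests, is_suspicious, ["user_agent", "method", "host"])
best_share, best_support, best_predicates = slices[0]
print(best_share, best_support, best_predicates)
```

In this toy data, neither the user-agent nor the method alone isolates the suspicious requests, but their conjunction does, which is the kind of combination a blocking rule can be built from.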

Once the ML model learns what makes for suspicious temporal behavior, we end up with a blocking rule like this:

Autonomous System = "AMAZON-02" & User-agent = "Mozilla/5.0 (X11; Ubuntu; Linux i686; rv:24.0) Gecko/20100101 Firefox/24.0" & Method = "POST"
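A rule of this shape is a conjunction of equality checks, so evaluating it against a request is straightforward. The sketch below assumes a request is represented as a dictionary with hypothetical keys `autonomous_system`, `user_agent`, and `method`; how rules are actually stored and enforced is not described in this article.

```python
BLOCKING_RULE = {
    "autonomous_system": "AMAZON-02",
    "user_agent": (
        "Mozilla/5.0 (X11; Ubuntu; Linux i686; rv:24.0) "
        "Gecko/20100101 Firefox/24.0"
    ),
    "method": "POST",
}


def matches(rule, request):
    """True when the request satisfies every field = value predicate of the rule."""
    return all(request.get(field) == value for field, value in rule.items())


# Hypothetical requests: the second one differs only by its HTTP method.
blocked = dict(BLOCKING_RULE, host="shop.example")
allowed = dict(blocked, method="GET")

print(matches(BLOCKING_RULE, blocked), matches(BLOCKING_RULE, allowed))
```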

We proceed to generalize several suspicious patterns we identified. To name a few:

- Flat Traffic: In general, human traffic respects a day/night pattern. Some bot traffic will appear more “flat” over time.

- Periodic Traffic: Traffic that peaks for short periods of time at regular intervals suggests an automated program.

- Sudden Spike: It might be an attack or a highly anticipated release, depending on the customer.
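Each of these three patterns can be captured by a simple numeric indicator. The functions below are illustrative only: they are hand-written heuristics of the kind that could serve as input features, not the features or logic of the model described in this article, and the example series are invented.

```python
import statistics


def flatness(series):
    """Coefficient of variation: values near 0 mean the traffic barely changes."""
    mean = statistics.fmean(series)
    return statistics.pstdev(series) / mean if mean else 0.0


def autocorrelation(series, lag):
    """Correlation between the series and itself shifted by `lag` buckets."""
    mean = statistics.fmean(series)
    centered = [v - mean for v in series]
    denominator = sum(c * c for c in centered)
    if denominator == 0:
        return 0.0
    return sum(a * b for a, b in zip(centered, centered[lag:])) / denominator


def max_zscore(series):
    """How many standard deviations the highest point sits above the mean."""
    std = statistics.pstdev(series)
    return (max(series) - statistics.fmean(series)) / std if std else 0.0


flat = [100, 101, 99, 100, 100, 101, 99, 100]
day_night = [10, 20, 80, 120, 110, 60, 20, 10]
periodic = [0, 0, 50, 0, 0, 50, 0, 0, 50, 0, 0, 50]
spike = [20] * 11 + [400]

print("flatness:", flatness(flat), flatness(day_night))
print("autocorrelation at lag 3:", autocorrelation(periodic, 3))
print("max z-score:", max_zscore(spike), max_zscore(day_night))
```

A low coefficient of variation points to flat traffic, a high autocorrelation at a short lag points to periodic traffic, and a large maximum z-score points to a sudden spike. As noted above, a spike is not necessarily an attack, so indicators like these only make sense in combination with other signals.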

The following graphs display the number of requests blocked over time by different blocking patterns, all generated by our time-series-based ML models.

Flat Traffic

Examples of bot time series blocked by our AI because of too-flat traffic:

Periodic Traffic

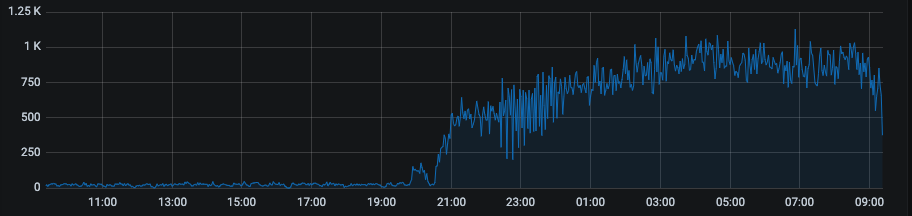

Examples of bot time series blocked by our AI because of short-period periodic traffic:

Sudden Traffic Spikes

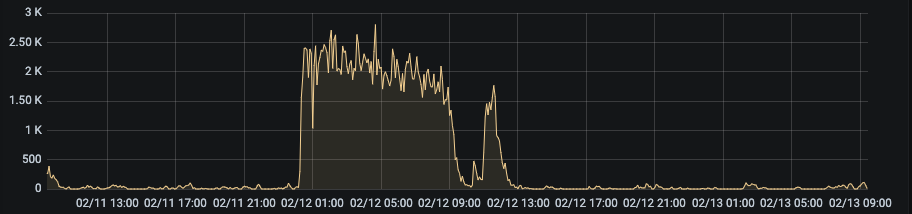

Example of bot time series blocked by our AI because of sudden high peaks of traffic:

On/Off Traffic

The ML model managed to block a fourth pattern—an “On/Off” pattern directly linked to the quality of the segmentation. Examples:

Combinations of Patterns

The patterns presented above can be observed simultaneously.

“On/Off” + “Spike” Patterns

“Flat” + “On/Off” Patterns

Periodical “On/Off” + “Spike” + “Flat” Patterns

In the last example above, we can see that the bot makes very few requests at night. During the day, it switches to flat traffic. This does not rule out occasional request peaks, such as the one on the night of February 12 to 13 at 1:00 AM.

Conclusion

In this article, we presented some results of our current ML models dedicated to time series analysis. By focusing on specific segmentation fields, we are able to automatically identify suspicious temporal patterns and block the traffic.

We used artificial intelligence (AI) to address several related problems at the same time. Instead of having separate models to identify flat traffic, periodical traffic, etc., we designed one single model to identify all bot temporal patterns. To learn more about DataDome’s use of ML and AI to protect our customers from malicious bots and online fraud, browse more of our threat research insights.

*** This is a Security Bloggers Network syndicated blog from DataDome authored by Antoine de Daran, Cybersecurity Data Scientist. Read the original post at: https://datadome.co/threat-research/identifying-suspect-temporal-patterns/