The guide to analyzing Kubernetes runtime detection alerts using Amazon Athena

Introduction

Lightspin created a public repository of common use cases that simulate unusual or malicious activity within a Kubernetes cluster. The malicious activities include container escape attempts, reconnaissance actions, and cryptocurrency mining. All of the presented use cases are detected by the Lightspin Kubernetes Runtime Protection solution, which triggers alerts with full information about the suspicious activity. You can stream the alerts to an S3 bucket by configuring an “AWS S3” integration in Lightspin’s platform. In this post, we will guide you through the full process: configuring an S3 bucket integration, running a live simulation inside a Kubernetes cluster, loading the alerts from the S3 bucket into an Amazon Athena table, and querying the results.

Before you begin

You need to have a Kubernetes cluster, and the kubectl command-line tool must be configured to communicate with your cluster. This cluster should also be connected to the Lightspin platform, and the “Runtime Protection” option should be enabled. In addition, you need to connect an AWS account to the Lightspin platform and ensure you have access to Amazon Athena and S3 within this account.
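As a quick way to confirm the kubectl prerequisite before starting, here is a minimal Python sketch. It assumes `kubectl` is on your `PATH` and treats a successful `kubectl cluster-info` as evidence that the current context can reach the cluster. The function name and the timeout value are illustrative choices, not part of Lightspin's tooling.

```python
import shutil
import subprocess


def kubectl_can_reach_cluster(kubectl: str = "kubectl", timeout_s: int = 15) -> bool:
    """Return True if `<kubectl> cluster-info` exits successfully."""
    # If the binary is not on PATH there is nothing to run.
    if shutil.which(kubectl) is None:
        return False
    try:
        result = subprocess.run(
            [kubectl, "cluster-info"],
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except (subprocess.TimeoutExpired, OSError):
        # An unreachable API server often shows up as a timeout.
        return False
    return result.returncode == 0
```

Calling `kubectl_can_reach_cluster()` returns `False` when kubectl is missing, times out, or exits with an error. It does not check that the cluster is connected to the Lightspin platform, which still has to be verified in the platform itself.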

Disclaimer: Please note that working through this document will incur charges against your AWS account for Amazon Athena usage and Amazon S3 storage.

Configure S3 bucket integration in Lightspin’s platform

If the AWS account is not already configured with an AWS S3 integration, follow the steps below to create one. You can create a new S3 bucket or use an existing one for the target S3 bucket.

- Open the Lightspin platform.

- Go to Settings and open Alerts & Notifications.

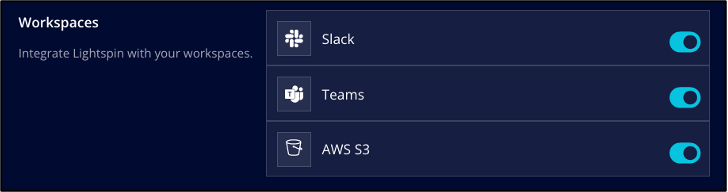

- In the Workspaces section, enable AWS S3 integration.

- For AWS S3 Bucket Name, enter the target S3 bucket name. For AWS Account, choose the AWS account where the S3 bucket is located.

- Click the Test S3 Bucket Connection button to ensure that the connection is successful.

- Click Save.

- In the Alert Channels section, choose Create Alert Channel.

- For Name, enter a name for the new channel.

- For Type, choose Kubernetes Runtime.

- For AWS S3 Bucket, choose the S3 bucket name that you configured above.

- For Minimum Severity, choose Medium.

- Ensure the Enable option is checked.

- Click Save.

Congratulations! You have an S3 bucket integration in Lightspin’s platform.

Simulate malicious activity and trigger events in the Kubernetes cluster

Now that the S3 bucket integration is set up, alerts from the Kubernetes runtime protection are streamed into the lightspin/k8s_runtime_events folder within the bucket. We will run simulations from the lightspin-k8s-attack-simlutions repository to trigger those events inside the Kubernetes cluster. The repository includes instructions for installing and using the tool. We suggest executing several simulations so that more than one event is created in the S3 bucket.

In the example below, you can see the execution of the cryptocurrency mining simulation inside the cluster.

The events for the triggered alerts are added to the S3 bucket shortly after the simulation runs.
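To check from a script that events have arrived, a minimal sketch like the following lists the object keys under the lightspin/k8s_runtime_events/ folder. It assumes you pass in an S3 client that exposes `list_objects_v2` the way boto3's S3 client does. The helper name `iter_event_keys` is hypothetical, and the client is injected so the sketch does not import boto3 itself.

```python
from typing import Any, Iterator

# Folder that the integration writes to, as named in this post.
EVENTS_PREFIX = "lightspin/k8s_runtime_events/"


def iter_event_keys(s3_client: Any, bucket: str, prefix: str = EVENTS_PREFIX) -> Iterator[str]:
    """Yield the key of every object stored under the runtime-events prefix."""
    kwargs = {"Bucket": bucket, "Prefix": prefix}
    while True:
        page = s3_client.list_objects_v2(**kwargs)
        # "Contents" is absent when the page holds no objects.
        for obj in page.get("Contents", []):
            yield obj["Key"]
        if not page.get("IsTruncated"):
            break
        # Ask for the next page of results.
        kwargs["ContinuationToken"] = page["NextContinuationToken"]
```

With boto3 installed and credentials configured, `list(iter_event_keys(boto3.client("s3"), "BUCKET-NAME"))` should return a non-empty list once at least one alert has been streamed.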

Create the Athena table

Amazon Athena provides a convenient and quick way to query data from S3 using SQL queries. With Athena you can query large datasets, get results in seconds, and pay only for the queries you run. In the following section, we will create a table in Athena that will contain the runtime events from S3.

- Open the Athena console.

- If this is your first time visiting the Athena console in your current AWS Region, choose Explore the query editor to open the query editor. Otherwise, Athena opens in the query editor.

- Choose View Settings to set up a query result location in Amazon S3.

- In the Settings tab, choose Manage.

- For Manage settings, under Location of query result, enter the path to a bucket where the query results will be stored. The bucket should be in the current AWS Region. (Suggestion: do not use the same S3 bucket in which you store the runtime events.)

- Choose Save.

- Choose Editor and execute the following query to create the table. Change the BUCKET-NAME placeholder to the name of your S3 bucket that contains the runtime events.

CREATE EXTERNAL TABLE IF NOT EXISTS runtime_events (

`container.id` string,

`evt.time` string,

`k8s.ns.name` string,

`k8s.pod.name` string,

`proc.cmdline` string,

`proc.pid` int,

`cluster_id` string,

`rule_name` string,

`description` string,

`severity` string,

`related_cves` string

)

ROW FORMAT SERDE 'org.openx.data.jsonserde.JsonSerDe'

LOCATION 's3://BUCKET-NAME/lightspin/k8s_runtime_events/'
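If you would rather script this step than paste the statement into the console, the sketch below fills in the BUCKET-NAME placeholder and submits the same DDL through an Athena client. It assumes a client with boto3's `start_query_execution` signature is passed in. The helper names, the `default` database, and the results location are assumptions for illustration and are not specified in this post.

```python
from typing import Any

# Same statement as above, with the bucket name left as a format field.
DDL_TEMPLATE = """CREATE EXTERNAL TABLE IF NOT EXISTS runtime_events (
  `container.id` string,
  `evt.time` string,
  `k8s.ns.name` string,
  `k8s.pod.name` string,
  `proc.cmdline` string,
  `proc.pid` int,
  `cluster_id` string,
  `rule_name` string,
  `description` string,
  `severity` string,
  `related_cves` string
)
ROW FORMAT SERDE 'org.openx.data.jsonserde.JsonSerDe'
LOCATION 's3://{bucket}/lightspin/k8s_runtime_events/'"""


def render_ddl(bucket: str) -> str:
    """Return the CREATE TABLE statement for the given events bucket."""
    # Expect a bare bucket name, not an s3:// URI or a path.
    if not bucket or "/" in bucket:
        raise ValueError("pass the bare bucket name, for example 'my-events-bucket'")
    return DDL_TEMPLATE.format(bucket=bucket)


def create_runtime_events_table(
    athena_client: Any,
    events_bucket: str,
    results_location: str,
    database: str = "default",  # assumption: the post does not name a database
) -> str:
    """Submit the DDL and return the Athena query execution ID."""
    response = athena_client.start_query_execution(
        QueryString=render_ddl(events_bucket),
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": results_location},
    )
    return response["QueryExecutionId"]
```

With boto3 installed, passing `boto3.client("athena")` as `athena_client` and the query result location from the Settings tab as `results_location` would start the same query asynchronously. The returned ID can then be used to check its status.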

The image below shows the query in the Athena editor:

The columns in the runtime_events table are defined as follows:

- container.id: The ID of the container where the event happened

- evt.time: The time when the event happened

- k8s.ns.name: The pod namespace

- k8s.pod.name: The pod name

- proc.cmdline: The command line whose execution triggered the event

- proc.pid: The ID of the process generating the event

- cluster_id: The cluster ID on Lightspin’s platform

- rule_name: The name of the rule that detected the event

- description: The description of the rule that detected the event

- severity: The severity of the event

- related_cves: Any CVEs that are related to this event
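To make the column list more concrete, the sketch below shows how one event could line up with these columns. It assumes each event is stored as a single-line JSON object whose top-level keys match the column names, which is the shape a JSON SerDe table reads. The sample values are invented for illustration and are not real Lightspin output.

```python
import json
from typing import Any, Dict

# Column names copied from the CREATE TABLE statement.
COLUMNS = [
    "container.id", "evt.time", "k8s.ns.name", "k8s.pod.name",
    "proc.cmdline", "proc.pid", "cluster_id", "rule_name",
    "description", "severity", "related_cves",
]


def event_to_row(json_line: str) -> Dict[str, Any]:
    """Project one JSON event onto the table columns.

    Keys missing from the event become None (NULL in a query result);
    keys that are not table columns are dropped.
    """
    event = json.loads(json_line)
    return {column: event.get(column) for column in COLUMNS}


# Hypothetical event; every value here is made up.
SAMPLE_EVENT_LINE = json.dumps({
    "container.id": "0123456789ab",
    "evt.time": "2022-06-01T10:00:00Z",
    "k8s.ns.name": "default",
    "k8s.pod.name": "miner-sim",
    "proc.cmdline": "xmrig --url pool.example.com:3333",
    "proc.pid": 4242,
    "cluster_id": "cluster-1",
    "rule_name": "Detect Outbound Connections To Common Miner Pool Ports",
    "severity": "High",
    "unlisted_field": "not a table column",
})

row = event_to_row(SAMPLE_EVENT_LINE)
print(row["rule_name"], row["proc.pid"], row["related_cves"])
```

Note that evt.time is declared as a string, so sorting on it gives chronological order only if the stored format sorts lexicographically, as the ISO-style timestamp assumed here does.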

Execute the command below to get a couple of rows to see what the data looks like:

SELECT * FROM runtime_events limit 10;

The image below shows an example output of the data in the runtime_events table:

Analyze runtime events using Athena queries

After we have created the runtime_events table, we can query the data and search for high-priority alerts or additional insights. Let’s look at some examples of interesting queries.

Query 1: Find which rules are triggered the most

SELECT rule_name, severity, COUNT(rule_name) as count_rules FROM runtime_events GROUP BY rule_name, severity ORDER BY count_rules DESC;

Example output:

Query 2: Search for high-severity alert events that occurred within the cluster

SELECT rule_name, "proc.cmdline", "evt.time" FROM runtime_events WHERE severity='High' ORDER BY "evt.time" DESC limit 8;

Example output:

Query 3: Look for a specific rule

SELECT * FROM runtime_events WHERE rule_name='Detect Outbound Connections To Common Miner Pool Ports';

Example output:
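To try the three queries without an Athena table, the sketch below runs them against a few made-up rows in an in-memory SQLite database. SQLite is only a local stand-in for Athena here: these statements use syntax both engines accept, but other Athena queries may not carry over. Only the columns the queries touch are created, the two "Hypothetical" rule names and all row values are invented, and Query 1 is sorted with DESC so the most-triggered rule comes first.

```python
import sqlite3

MINER_RULE = "Detect Outbound Connections To Common Miner Pool Ports"

# (proc.cmdline, evt.time, rule_name, severity); all values are invented.
SAMPLE_ROWS = [
    ("xmrig --url pool.example.com:3333", "2022-06-01T10:00:00Z", MINER_RULE, "High"),
    ("xmrig --url pool.example.com:3333", "2022-06-01T10:05:00Z", MINER_RULE, "High"),
    ("xmrig --url pool.example.com:3333", "2022-06-01T10:10:00Z", MINER_RULE, "High"),
    ("nsenter -t 1 -m sh", "2022-06-01T09:00:00Z", "Hypothetical Container Escape Rule", "High"),
    ("nsenter -t 1 -m sh", "2022-06-01T09:30:00Z", "Hypothetical Container Escape Rule", "High"),
    ("nmap -sT 10.0.0.0/24", "2022-06-01T08:00:00Z", "Hypothetical Reconnaissance Rule", "Medium"),
]

QUERY_1 = (
    "SELECT rule_name, severity, COUNT(rule_name) AS count_rules "
    "FROM runtime_events GROUP BY rule_name, severity ORDER BY count_rules DESC"
)
QUERY_2 = (
    'SELECT rule_name, "proc.cmdline", "evt.time" FROM runtime_events '
    "WHERE severity='High' ORDER BY \"evt.time\" DESC LIMIT 8"
)
QUERY_3 = f"SELECT * FROM runtime_events WHERE rule_name='{MINER_RULE}'"


def load_sample_events() -> sqlite3.Connection:
    """Create an in-memory table holding the sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        'CREATE TABLE runtime_events '
        '("proc.cmdline" TEXT, "evt.time" TEXT, rule_name TEXT, severity TEXT)'
    )
    conn.executemany("INSERT INTO runtime_events VALUES (?, ?, ?, ?)", SAMPLE_ROWS)
    return conn


conn = load_sample_events()
most_triggered = conn.execute(QUERY_1).fetchall()
high_severity = conn.execute(QUERY_2).fetchall()
miner_events = conn.execute(QUERY_3).fetchall()

for rule_name, severity, count_rules in most_triggered:
    print(f"{count_rules:>3}  {severity:<6}  {rule_name}")
```

On this sample data, Query 1 ranks the miner rule first, Query 2 returns the five High rows from newest to oldest, and Query 3 returns the three miner events.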

Conclusion

According to the Cloud Native Computing Foundation’s 2021 survey, 96% of organizations are either using or evaluating Kubernetes, a record high since the surveys began in 2016. Kubernetes has rapidly become one of the most widely used platforms for managing organizations’ containerized workloads and services. As such, it is essential that organizations improve their ability to secure and protect their environments. In this post, we created an S3 bucket integration to stream and store runtime alert events from the Lightspin platform. Then, we ran an active test that simulates attacks and malicious activity within the Kubernetes cluster. Finally, we used Amazon Athena to create a table that loads the data from the S3 bucket and analyzed the results with SQL queries. As Kubernetes usage across regions and organizations continues to increase, it is vital that organizations put in place best practices and approaches to ensure advanced protection.


*** This is a Security Bloggers Network syndicated blog from Lightspin Blog authored by Ori Abargil. Read the original post at: https://blog.lightspin.io/the-guide-to-analyzing-kubernetes-runtime-detection-alerts-using-amazon-athena