ChatGPT Is Here, and So Are Its Risk Management Challenges

ChatGPT promises to transform all sorts of corporate business functions, and perhaps in the fullness of time those rosy predictions will prove true.

The risks around ChatGPT, on the other hand, are already here — and compliance officers need to gird for battle against those risks immediately.

Why? Because ChatGPT (and similar generative AI tools following close behind) doesn’t just address one specific business process in the way that a spreadsheet, a payments app, or photo editing software might. ChatGPT is a breakthrough in general technology that supports all business processes. In that sense, it’s more akin to the rise of social media, mobile devices, or even the Internet itself.

In many ways that’s great; ChatGPT could be a godsend for tasks such as translation, content writing, coding, and more. But the security, compliance, and business risks that come along with such a powerful technology are just as far-reaching. Compliance officers have an enormous amount of work to do if their companies want to avoid being overwhelmed.

Every Risk Everywhere All at Once

The most immediate challenge for compliance officers will simply be to understand what all those ChatGPT risks are. They’ll come in different forms and from all directions. For example, you’re likely to encounter:

Internal security risks, as employees use ChatGPT or similar applications to, say, write software code. If they deploy that code without careful testing first, it could introduce vulnerabilities the IT security team doesn’t know about.

External security risks, because attackers will use ChatGPT to write malware, business email compromises, more convincing and grammatically correct phishing attacks, and similar threats. As it becomes easier to generate and deploy those attacks, they’ll become more common.

Compliance risks, if employees use ChatGPT in ways that might violate regulatory standards. For example, they might load personally identifiable information about customers into ChatGPT to write a report (which is a privacy violation) or use the app to summarize unpublished financial data (securities law violation).

Operational risks, because as wondrous as ChatGPT is, it still gets many basic facts wrong. If an employee uses the app to write a report that contains errors, and that report then goes to senior management as part of a critical business decision, many problems could ensue.

Strategic risks, as your company and your competitors all search for the opportunities that ChatGPT brings, too. What if you embrace ChatGPT too quickly, and its risks outweigh the benefits? What if you embrace it too slowly, and competitors achieve dazzling new performance? How do you find the right path forward, and the right moment to take it?

To make matters even more complicated, every other business is making these same assessments about ChatGPT. Perhaps their use of generative AI will create new opportunities for you and vice-versa. Or maybe their use of these tools will put you at some disadvantage.

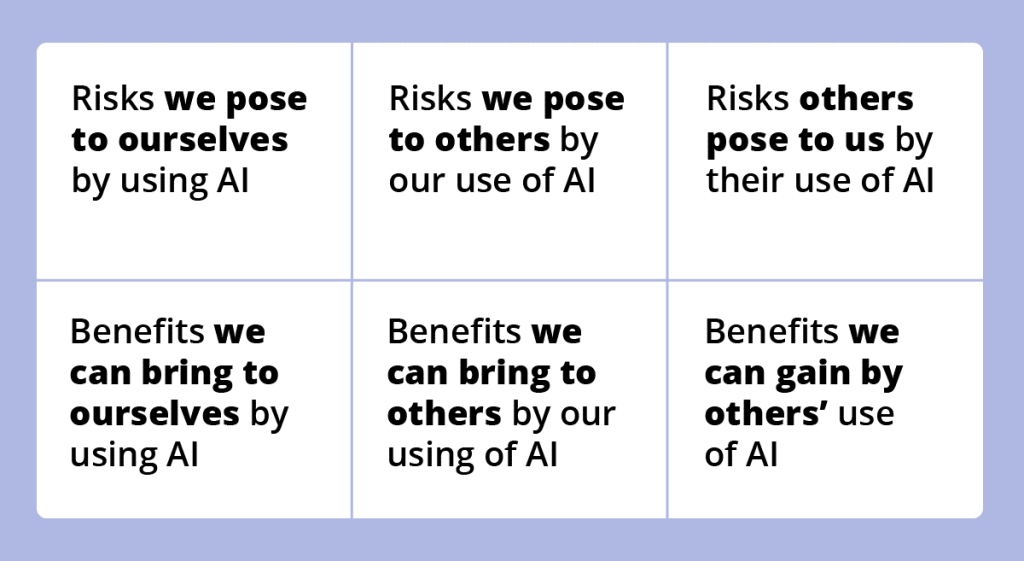

To bring order to that chaos, we can organize ChatGPT’s risks and opportunities into a matrix. It would look something like this:

To harness the power of ChatGPT as fully and wisely as possible, companies could assemble a cross-enterprise group that can work its way through the above matrix — defining new risks along the way and logging those risks in a risk register.

Clearly the CISO should be a part of that group, along with the head of legal, regulatory compliance, and operating teams that might benefit from (or be disrupted by) using ChatGPT. At the end of the process, CISOs should have a list of risks that ChatGPT might introduce or amplify. CISOs can then develop strategies to address those risks. Other risk management functions (legal and regulatory compliance, for example) could do the same for new risks that they’ll need to mitigate, too.
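As a rough illustration of what logging those risks might look like, here is a minimal Python sketch of a risk register. The field names, the 1-to-5 likelihood and impact scales, and the sample entries are all assumptions made for this example; they are not drawn from any particular GRC tool or framework.

```python
from dataclasses import dataclass, field
from enum import Enum


class RiskCategory(Enum):
    """The five risk types discussed in this article."""
    INTERNAL_SECURITY = "internal security"
    EXTERNAL_SECURITY = "external security"
    COMPLIANCE = "compliance"
    OPERATIONAL = "operational"
    STRATEGIC = "strategic"


@dataclass
class Risk:
    title: str
    category: RiskCategory
    owner: str       # role accountable for the response (hypothetical labels)
    likelihood: int  # 1 (rare) to 5 (almost certain); the scale is an assumption
    impact: int      # 1 (minor) to 5 (severe); the scale is an assumption

    @property
    def score(self) -> int:
        # Simple likelihood-times-impact scoring, one common convention
        return self.likelihood * self.impact


@dataclass
class RiskRegister:
    risks: list[Risk] = field(default_factory=list)

    def log(self, risk: Risk) -> None:
        self.risks.append(risk)

    def by_owner(self, owner: str) -> list[Risk]:
        """Return one owner's risks, highest score first."""
        mine = [r for r in self.risks if r.owner == owner]
        return sorted(mine, key=lambda r: r.score, reverse=True)


register = RiskRegister()
register.log(Risk("More convincing phishing emails",
                  RiskCategory.EXTERNAL_SECURITY, "CISO", 5, 3))
register.log(Risk("Untested AI-generated code deployed",
                  RiskCategory.INTERNAL_SECURITY, "CISO", 4, 4))
register.log(Risk("Customer PII pasted into prompts",
                  RiskCategory.COMPLIANCE, "Compliance", 3, 5))

for risk in register.by_owner("CISO"):
    print(f"{risk.score:>2}  {risk.category.value}: {risk.title}")
```

Filtering by owner mirrors the hand-off described above: the CISO pulls the security items, while legal or compliance teams would pull their own.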

Fighting ChatGPT Risk With Policies

Once your company has developed a list of risks that ChatGPT might bring to the business, the next step is to implement policies to reduce those risks, either by adopting entirely new policies or (more likely) by updating existing policies to ensure that they still work in the era of ChatGPT.

For example, we know that threat actors will try to use ChatGPT to sharpen their attacks against businesses. In that case, you might want to revisit your anti-fraud policies. You could consider requiring additional approvals before executing a wire transfer overseas, or changing the type of approval: phone or in-person authorization, rather than electronic approvals only.
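To make that policy change concrete, the sketch below expresses it as a small rule in Python. It assumes a hypothetical payments workflow; the `WireTransfer` type, the `HOME_COUNTRY` constant, and the approval step names are invented for illustration and would differ in any real system.

```python
from dataclasses import dataclass

HOME_COUNTRY = "US"  # assumption: the company's home jurisdiction


@dataclass(frozen=True)
class WireTransfer:
    amount_usd: float
    destination_country: str  # two-letter country code


def required_approvals(transfer: WireTransfer) -> list[str]:
    """Return the approval steps a transfer must clear before execution."""
    steps = ["electronic approval"]
    if transfer.destination_country != HOME_COUNTRY:
        # Overseas transfers get an extra approver plus an out-of-band check,
        # since a convincing email alone should not be enough to move money.
        steps.append("second approver")
        steps.append("phone or in-person authorization")
    return steps


print(required_approvals(WireTransfer(25_000, "US")))
print(required_approvals(WireTransfer(25_000, "SG")))
```

Writing the rule down this explicitly also makes it easier to document and test, whatever system ends up enforcing it.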

You’d also need to consider the privacy obligations you have with customer data, and then tailor your ChatGPT usage policies to maintain your privacy compliance. Healthcare and education organizations, for example, might want to ban employees from entering customer data into ChatGPT since that might constitute a privacy violation.
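A usage policy like that could be backed by a technical check. The following is a minimal sketch, assuming prompts pass through some internal gateway before they reach an AI service; the function names are hypothetical, and the two patterns catch only obviously formatted email addresses and US Social Security numbers. It is not a substitute for a real data loss prevention tool.

```python
import re

# Illustrative patterns only; real PII takes many more forms than these two.
PII_PATTERNS = {
    "email address": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US Social Security number": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def find_pii(prompt: str) -> list[str]:
    """Return the labels of every pattern that matches the prompt."""
    return [label for label, pattern in PII_PATTERNS.items()
            if pattern.search(prompt)]


def screen_prompt(prompt: str) -> str:
    """Pass the prompt through unchanged, or raise if it appears to hold PII."""
    findings = find_pii(prompt)
    if findings:
        raise ValueError("Prompt blocked; possible PII detected: "
                         + ", ".join(findings))
    return prompt


print(find_pii("Draft a polite reminder about the quarterly training."))
print(find_pii("Summarize the complaint from jane.doe@example.com, SSN 123-45-6789."))
```

Blocking the prompt outright, rather than silently redacting it, gives the employee a visible reminder of the policy and gives the compliance team an event to log.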

Companies might run into issues where they may have a legal or ethical responsibility to disclose that they have used ChatGPT in their practices. For example, say you use ChatGPT to conduct background research on job applicants. Do you disclose that fact early, perhaps with a disclaimer on your website saying “The company may use AI to assist in its hiring processes”? Or do you not disclose your use of AI at all?

In any case, you’ll need to understand what your existing compliance or security risks are and the current policies you have to manage those issues. Then, you’ll need to adopt new ChatGPT-oriented policies to address the new risks you’ve identified — and you’ll need to document all that effort to have an audit trail at the ready if auditors, regulators, business partners, or the public ever ask for it.

From Risk Response to ChatGPT Governance

Generative AI is here to stay, and eventually it will become a tool used across the enterprise. This means that assessing its risks and responding with new policies is only the beginning. Ultimately, CISOs will need to work with senior management and the board to govern how this technology is woven into your everyday operations.

Several implications flow from that point. First and most practically, CISOs will need to deploy governance frameworks for artificial intelligence. The good news is that several such frameworks already exist, including the AI Risk Management Framework that NIST published in January 2023 and another released by COSO in 2021. Neither is geared toward ChatGPT specifically, but both help CISOs and other risk managers understand how to start building processes to govern ChatGPT or any other generative AI app that comes along.

More good news is that GRC tools already exist to help you put those frameworks to use. The basic exercise here is to map the AI frameworks’ principles and controls to those of other risk management frameworks you might already use and to controls that already exist within your enterprise. Then you can get on with the work of implementing new controls as necessary and creating an audit trail to show your work.
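The mapping exercise can be pictured as a simple crosswalk. The Python sketch below is an illustration only: every requirement label and control ID in it is a made-up placeholder, not an actual identifier from the NIST or COSO frameworks or from any GRC product.

```python
# Hypothetical crosswalk: AI framework requirement -> existing internal controls.
# All labels and IDs are invented placeholders for illustration.
ai_framework_to_existing = {
    "AI-GOV-01 (assign accountability for AI use)": ["POL-007"],
    "AI-DATA-02 (restrict sensitive data in prompts)": ["PRIV-003", "DLP-011"],
    "AI-QA-03 (human review of AI-generated output)": [],
}

# Controls the enterprise has actually implemented (also hypothetical).
implemented_controls = {"POL-007", "PRIV-003"}


def find_gaps(mapping: dict[str, list[str]],
              implemented: set[str]) -> dict[str, list[str]]:
    """List, per requirement, the mapped controls that are not yet in place."""
    gaps = {}
    for requirement, controls in mapping.items():
        if not controls:
            gaps[requirement] = ["no mapped control; a new one is needed"]
            continue
        missing = [c for c in controls if c not in implemented]
        if missing:
            gaps[requirement] = missing
    return gaps


for requirement, missing in find_gaps(ai_framework_to_existing,
                                      implemented_controls).items():
    print(f"{requirement}: {', '.join(missing)}")
```

The output of a gap check like this is the to-do list for new controls, and keeping each run on file contributes to the audit trail.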

In the final analysis, senior management and the board will be the ones who decide how to use ChatGPT within your enterprise, because ChatGPT remains only a tool to help people achieve their objectives. The CISO’s role is more about how to use that tool in a risk-aware manner and meet your regulatory obligations at the same time.

Then again, with all the risks and rewards generative AI promises, that’s plenty enough work already.

*** This is a Security Bloggers Network syndicated blog from Hyperproof authored by Matt Kelly. Read the original post at: https://hyperproof.io/resource/chatgpt-risk-management/