The Role of Ethics in Cybersecurity Studies

Nobody wants to be a proverbial guinea pig, least of all developers donating their time and energy to making the world a better place. You’d think that with all the recent discussion about consent, researchers would observe ethical boundaries more carefully. Yet a group of researchers from the University of Minnesota (UMN) not only crossed the line but ran across it, screaming defiantly the whole way.

In response, the maintainers of the Linux kernel, one of the most prominent projects in the open source community, took the unprecedented step of banning the entire University of Minnesota from contributing to the kernel. The open source community is built upon the principles of trust, cooperation and transparency. Its members donate time and high-value industry skills to create, maintain and improve free and widely adopted software in the interest of making technology more accessible. Linux is a widely used operating system found in everything from servers to cell phones.

Yet a group of researchers abused this community’s trust by not only sneaking vulnerabilities into the code base but then effectively bragging about it in the name of research. In February 2021, a team from UMN published a research article outlining how they systematically and stealthily introduced vulnerabilities into open source software. They did this through commits that appeared beneficial but, in actuality, introduced critical vulnerabilities. Although the paper states that it targeted open source as a whole, much of the researchers’ attention was aimed at the Linux Kernel. The Kernel is the foundation of the operating system and manages the interactions between hardware and applications.

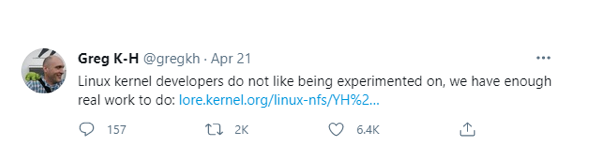

“Experiments” like this, conducted without informed consent, call into question the very ethics that even the most novice cybersecurity professionals learn. Moreover, after publishing the paper, the researchers continued this non-consensual test until they were called out publicly on the Linux Kernel mailing list. Reviewers had noticed that numerous bad patches continued to come in. When confronted, the researchers dismissed the concerns, claiming the patches came from a static analyzer that they were still developing.

The Kernel group responded, pointing out that “They obviously were not created by a static analysis tool that is of any intelligence, as they all are the result of totally different patterns, and all of which are obviously not even fixing anything at all.”

In light of what appears to be blatant deceit and an unwillingness to take responsibility, the group had to draw a hard line in the sand. They pointed out that the testing was not consensual and that submissions generated by an experimental tool are normally labeled as such. This specific research team’s history of unrepentant abuse, and the failure of escalations to their University to remediate it, left the Kernel group with little choice. They banned the entire University from future contributions and are working to remove all prior submissions.

This whole situation revolves around the concepts of ethics within the cybersecurity profession. When conducting cybersecurity research, how do you seek consent when consent might alter the findings? Do the ends justify the means?

The Importance of Ethics in Cybersecurity Research

Given that I can (and will) list ways the researchers in the above situation could have behaved more ethically and still carried out their research, it’s evident that what was missing in this case was an understanding of the crucial role that ethics plays in cybersecurity.

They Had More Ethical Options

One option is that the research team could have started a new open source project they themselves owned and managed. As the owners of the project, they oversee the final commit process. This would have allowed them to inject subversive code for general review, along with what others submit, to see what survived the process, as was the goal of their research. Once it hit the final approval gate, they could have removed the bad submissions and prevented anything dangerous from going live.

Alternatively, they could have worked with the Linux Foundation to conduct this research as a controlled experiment. Getting the consent of the Foundation would mean that the admins knew which submissions were subversive, allowing them to be filtered before going live. While both of these options would have reduced the risk of a vulnerability making it through to a live product that people depend on, they still fall into an ethical gray area: each amounts to a social experiment on individuals who donated their time and skill in good faith. Either would undoubtedly have been better than the path the researchers chose, but neither is completely ethical. Experimenting on or with human behavior is always a tricky proposition.

As it is, the path they chose and the reasoning behind it are reminiscent of the early days of technology, when the line between good-faith security testing and cybercriminal activity was blurred. That blurring was the impetus for legislative intervention and for a code of ethics within the hacking/cybersecurity community. Ethics are the critical line that differentiates a white hat hacker from a black hat bad actor.

Consent in Security

To differentiate ourselves from the criminal element and to be taken seriously, the cybersecurity community openly embraces an ethical approach to all our activities. Explicit examples of this are published by SANS and EC-Council; the latter explicitly mentions getting a third party’s consent for certain research and investigative activities. This is vital because, even in the best circumstances, security researchers doing their jobs responsibly and appropriately may be forced to defend themselves and their actions against legal challenges. The case of the Coalfire team in 2019, in which two of the firm’s penetration testers were arrested while carrying out a contracted assessment of an Iowa courthouse, demonstrated how this can happen.

Finding security vulnerabilities is essential to the development of security products and processes, because the last thing anyone wants is for the criminals to reveal our weak points via an attack. Yet, ignoring consent risks painting research as a cybercrime. Banks definitely want to know if there are weak points in their security; yet, they aren’t favorably disposed to the stress and expense of an incident. No one wants their security team mobilized, customer accounts locked and systems pulled offline because a random ‘researcher’ was testing.

This is the reason that penetration testing companies exist. The role of a penetration tester is clearly defined in both scale and scope. When testers are hired, the parameters and limitations of the tests they are to perform are agreed upon in advance. There is even a solid ethics component throughout the education curriculum and training necessary to become a certified pen tester.

Ethics in Research

Much like the security profession, the scientific community subscribes to a set of ethical guidelines about how research is conducted. UMN specifically has an Institutional Review Board (IRB), which outlines the types of research that are acceptable with human subjects and which is intended to review studies involving human subjects and approve them, or deny them if they don’t conform to ethics guidelines.

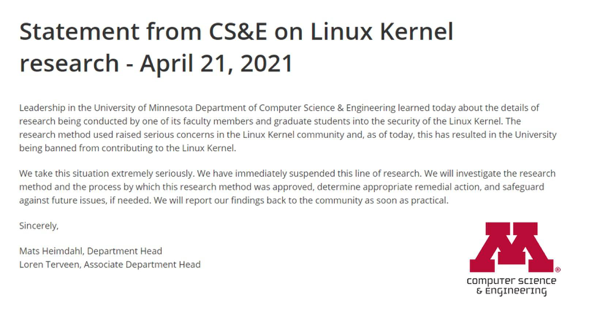

Apparently, the IRB at UMN does not consider the Linux Kernel developer community to be human; according to the research paper, they provided an exemption to the team. I am not sure how researching the way a development team reacts to subversive behavior is not a study of humans or of human behavior. However, my expertise is in cybersecurity for a reason. We also should consider the possibility that the IRB at UMN might have been misled. The fact that UMN has recently launched an investigation seems to support that possibility.

Regardless of the IRB decision, the researchers still knowingly chose to conduct a study on unwilling participants. This raises the question of whether they felt the end results of this research justified the questionable means of accomplishing it. Throughout the history of science, this has been a long-running debate. IRBs were put in place at institutions to prevent the kind of abuses that can occur without consent, as happened in cases such as the Tuskegee syphilis study or the atrocities of Holocaust experimentation. While this incident of non-consensual experimentation pales next to these historic cases, the fact is that it’s a slippery slope from one to the other.